For a comparison between the `groq/compound` and `groq/compound-mini` systems and more information regarding extra capabilities, see the [Compound Systems](/docs/compound/systems#system-comparison) page.

## Quick Start

To use website visiting, simply include a URL in your request to one of the supported models. The examples below show how to access all parts of the response: the final content, reasoning process, and tool execution details.

*These examples show how to access the complete response structure to understand the website visiting process.*
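As a minimal Python sketch of such a request, assuming the OpenAI-compatible chat completions endpoint and assuming the response message exposes `reasoning` and `executed_tools` fields alongside `content` (the helper names `build_payload`, `extract_parts`, and `visit_website` are hypothetical, not part of any SDK):

```python
import json
import os

# Endpoint of the OpenAI-compatible chat completions API.
API_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_payload(prompt: str, model: str = "groq/compound") -> dict:
    """Build the request body; the URL to visit is simply part of the prompt."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def extract_parts(response: dict) -> dict:
    """Collect the content, reasoning, and executed tools from a response.

    Assumption: `reasoning` and `executed_tools` live on the message object.
    """
    message = response["choices"][0]["message"]
    tools = message.get("executed_tools") or []
    visited = []
    for tool in tools:
        # `arguments` is a JSON-encoded string, e.g. '{"url": "https://..."}'.
        args = json.loads(tool.get("arguments") or "{}")
        if "url" in args:
            visited.append(args["url"])
    return {
        "content": message.get("content"),
        "reasoning": message.get("reasoning"),
        "executed_tools": tools,
        "visited_urls": visited,
    }


def visit_website(prompt: str) -> dict:
    """Send the request (needs GROQ_API_KEY in the environment) and parse it."""
    # Imported here so the pure helpers above work without the dependency.
    import requests

    response = requests.post(
        API_URL,
        headers={"Authorization": f"Bearer {os.environ['GROQ_API_KEY']}"},
        json=build_payload(prompt),
        timeout=120,
    )
    response.raise_for_status()
    return extract_parts(response.json())
```

Under those assumptions, `visit_website("Summarize the key points of this page: https://groq.com/blog/inside-the-lpu-deconstructing-groq-speed")` would return a dictionary with the final content, the reasoning text, the raw tool records, and the URLs decoded from each tool's `arguments` string.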

When the API is called, it will automatically detect URLs in the user's message and visit the specified website to retrieve its content. The response includes three key components:

- **Content**: The final synthesized response from the model
- **Reasoning**: The internal decision-making process showing the website visit
- **Executed Tools**: Detailed information about the website that was visited

## How It Works

When you include a URL in your request:

1. **URL Detection**: The system automatically detects URLs in your message
2. **Website Visit**: The tool fetches the content from the specified website
3. **Content Processing**: The website content is processed and made available to the model
4. **Response Generation**: The model uses both your query and the website content to generate a comprehensive response

### Final Output

This is the final response from the model, containing the analysis based on the visited website content. The model can summarize, analyze, extract specific information, or answer questions about the website's content.

**Key Take-aways from "Inside the LPU: Deconstructing Groq's Speed"**

| Area | What Groq does differently | Why it matters |
|------|----------------------------|----------------|
| **Numerics – TruePoint** | Uses a mixed-precision scheme that keeps 100-bit accumulation while storing weights/activations in lower-precision formats (FP8, BF16, block-floating-point). | Gives 2-4× speed-up over pure BF16 **without** the accuracy loss that typical INT8/FP8 quantization causes. |
| **Memory hierarchy** | Hundreds of megabytes of on-chip **SRAM** act as the primary weight store, not a cache layer. | Eliminates the 100-ns-plus latency of DRAM/HBM fetches that dominate inference workloads, enabling fast, deterministic weight access. |
| **Execution model – static scheduling** | The compiler fully unrolls the execution graph (including inter-chip communication) down to the clock-cycle level. | Removes dynamic-scheduling overhead (queues, reorder buffers, speculation) → deterministic latency, perfect for tensor-parallelism and pipelining. |
| **Parallelism strategy** | Focuses on **tensor parallelism** (splitting a single layer across many LPUs) rather than pure data parallelism. | Reduces latency for a single request; a trillion-parameter model can generate tokens in real-time. |
| **Speculative decoding** | Runs a small "draft" model to propose tokens, then verifies a batch of those tokens on the large model using the LPU's pipeline-parallel hardware. | Verification is no longer memory-bandwidth bound; 2-4 tokens can be accepted per pipeline stage, compounding speed gains. |

[...truncated for brevity]

**Bottom line:** Groq's LPU architecture combines precision-aware numerics, on-chip SRAM, deterministic static scheduling, aggressive tensor-parallelism, efficient speculative decoding, and a tightly synchronized inter-chip network to deliver dramatically lower inference latency without compromising model quality.

### Reasoning and Internal Tool Calls

This shows the model's internal reasoning process and the website visit it executed to gather information. You can inspect this to understand how the model approached the problem and what URL it accessed. This is useful for debugging and understanding the model's decision-making process.

**Inside the LPU: Deconstructing Groq's Speed**

Moonshot's Kimi K2 recently launched in preview on GroqCloud and developers keep asking us: how is Groq running a 1-trillion-parameter model this fast? Legacy hardware forces a choice: faster inference with quality degradation, or accurate inference with unacceptable latency. This tradeoff exists because GPU architectures optimize for training workloads. The LPU–purpose-built hardware for inference–preserves quality while eliminating architectural bottlenecks which create latency in the first place.

### Accuracy Without Tradeoffs: TruePoint Numerics

Traditional accelerators achieve speed through aggressive quantization, forcing models into INT8 or lower precision numerics that introduce cumulative errors throughout the computation pipeline and lead to loss of quality.

[...truncated for brevity]

### The Bottom Line

Groq isn't tweaking around the edges. We build inference from the ground up for speed, scale, reliability and cost-efficiency. That's how we got Kimi K2 running at 40× performance in just 72 hours.

### Tool Execution Details

This shows the details of the website visit operation, including the type of tool executed and the content that was retrieved from the website.

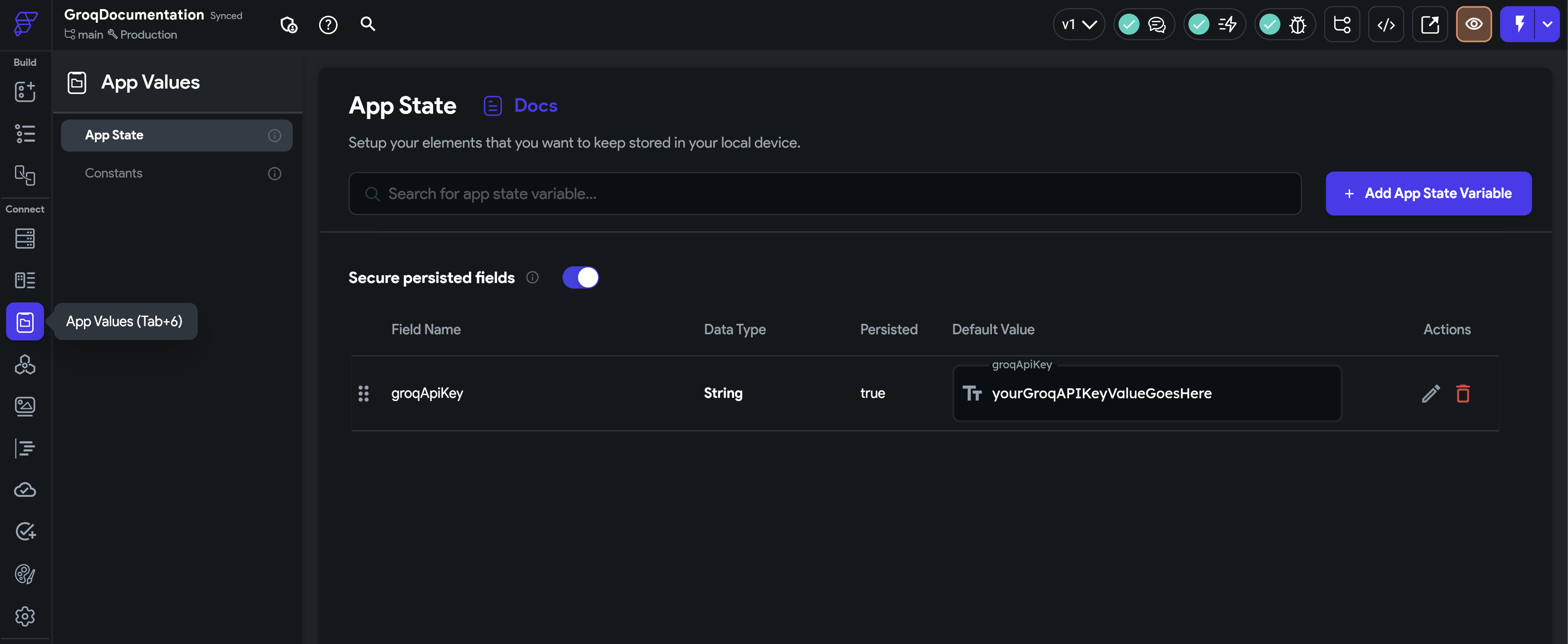

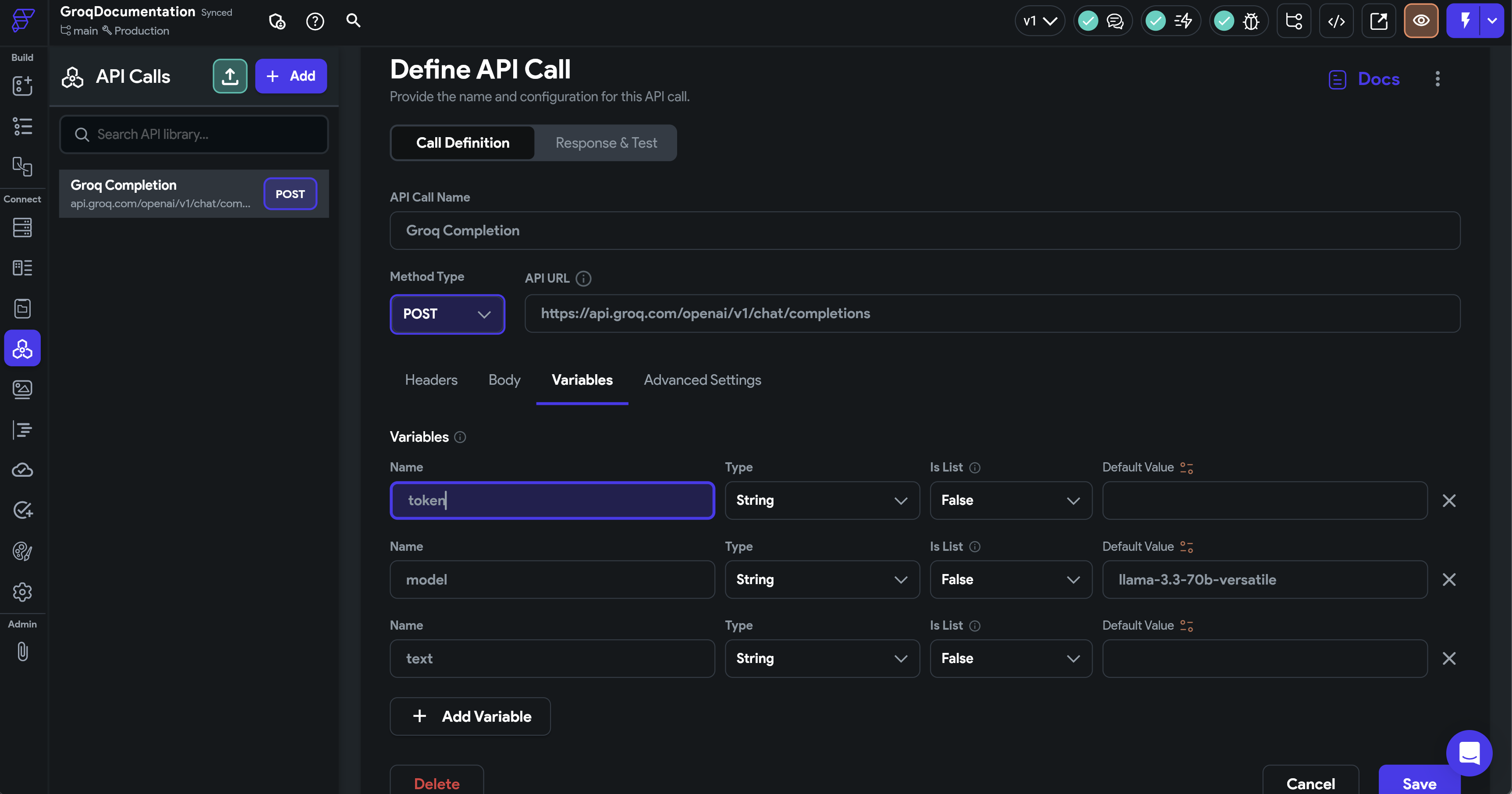

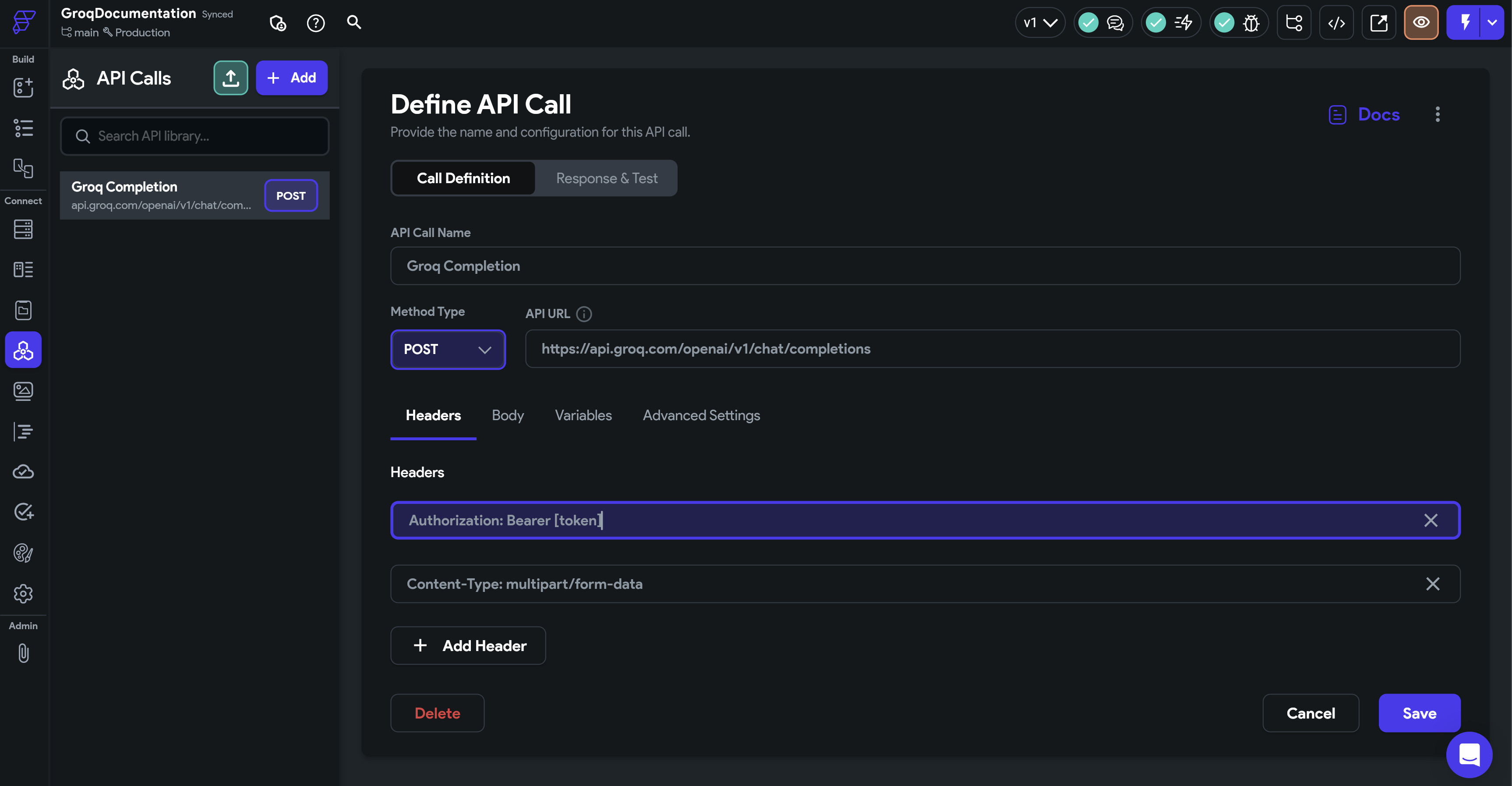

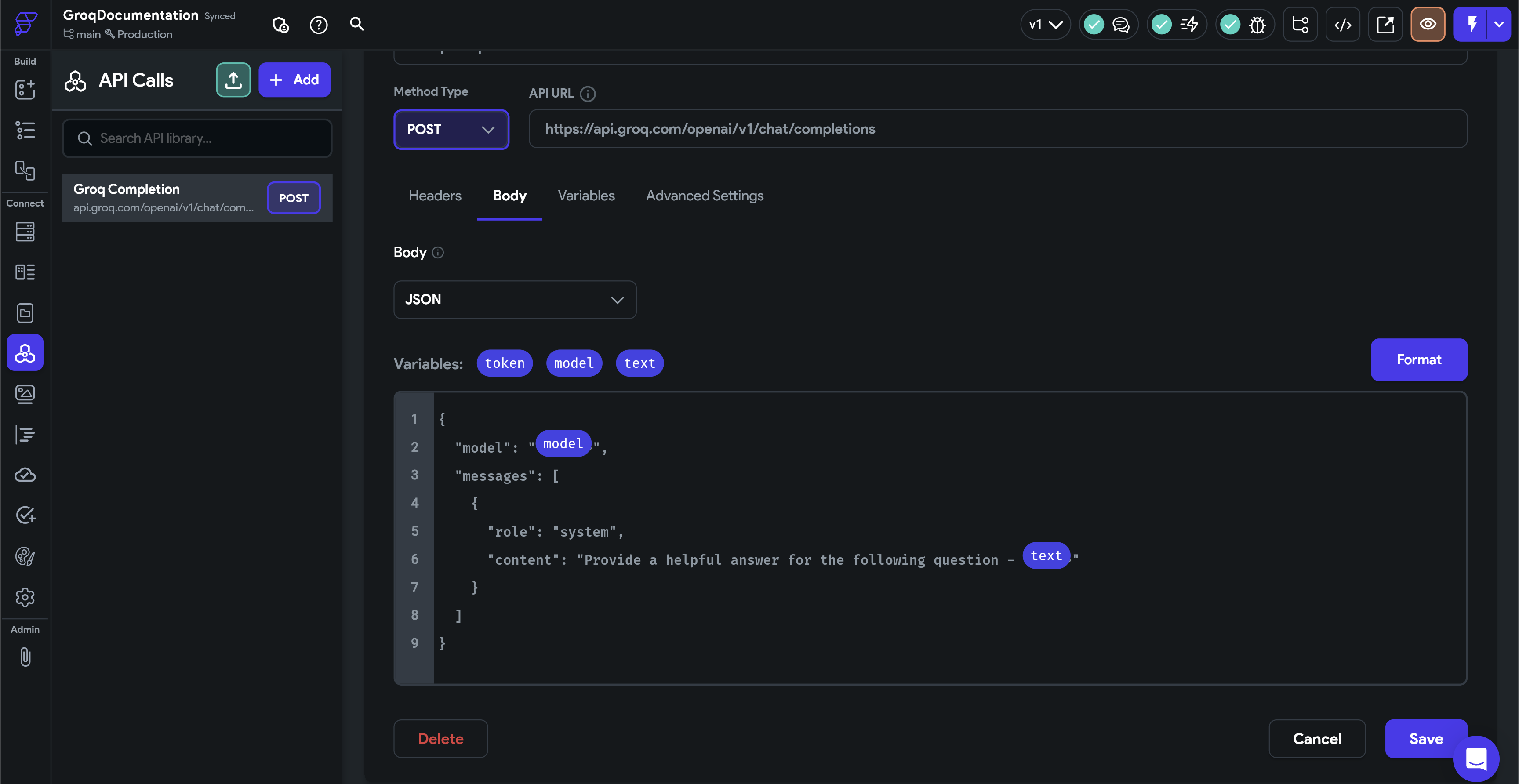

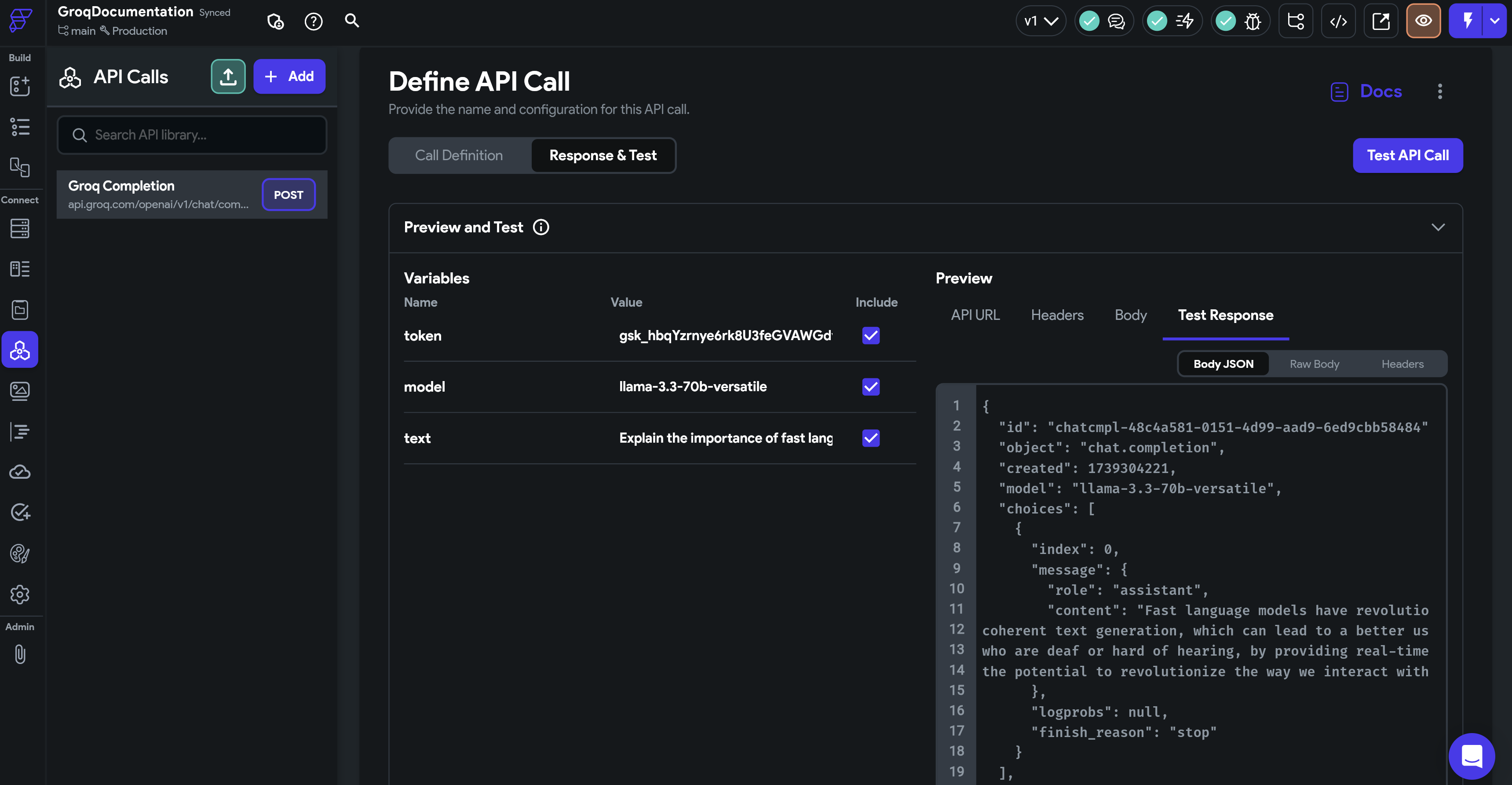

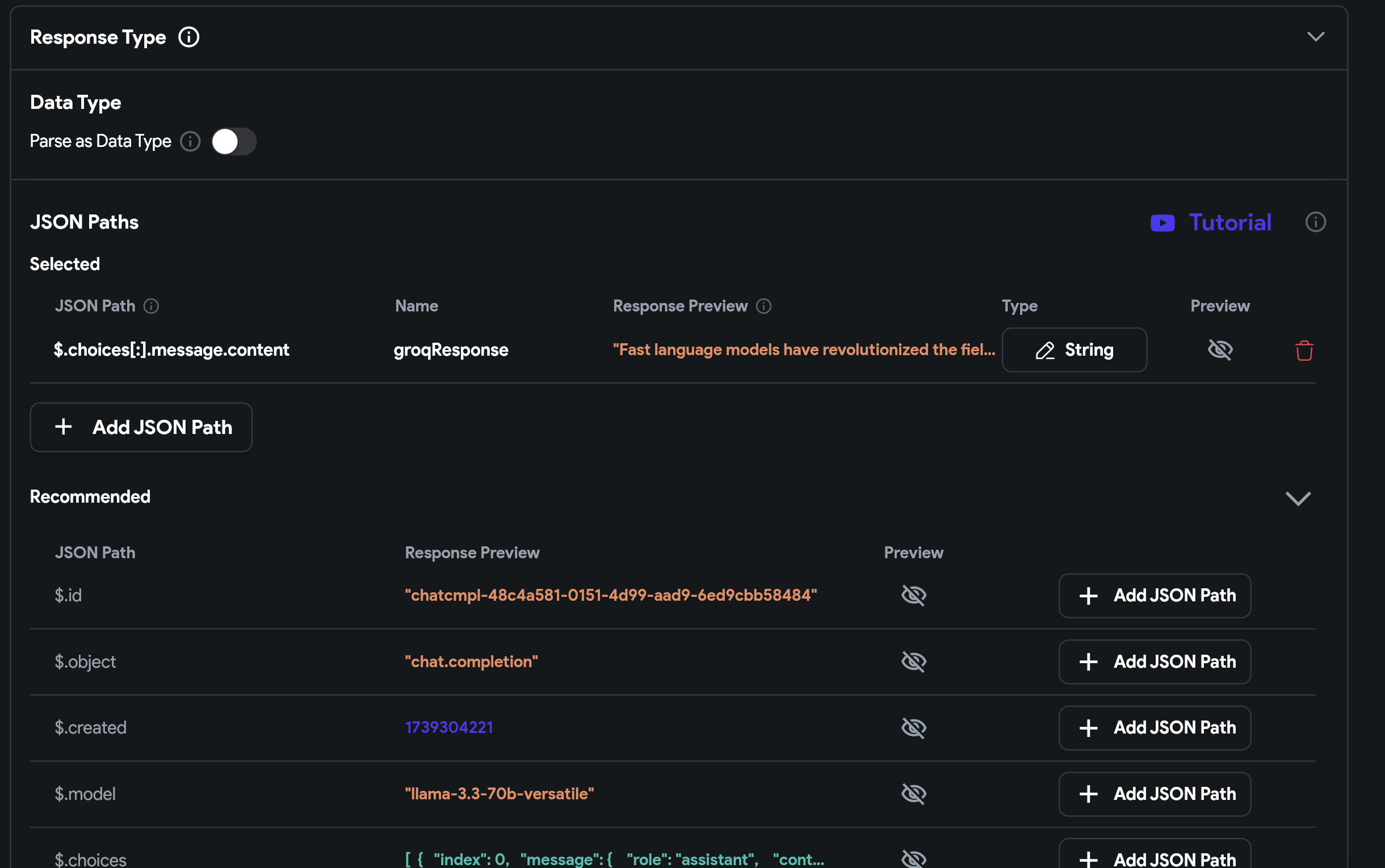

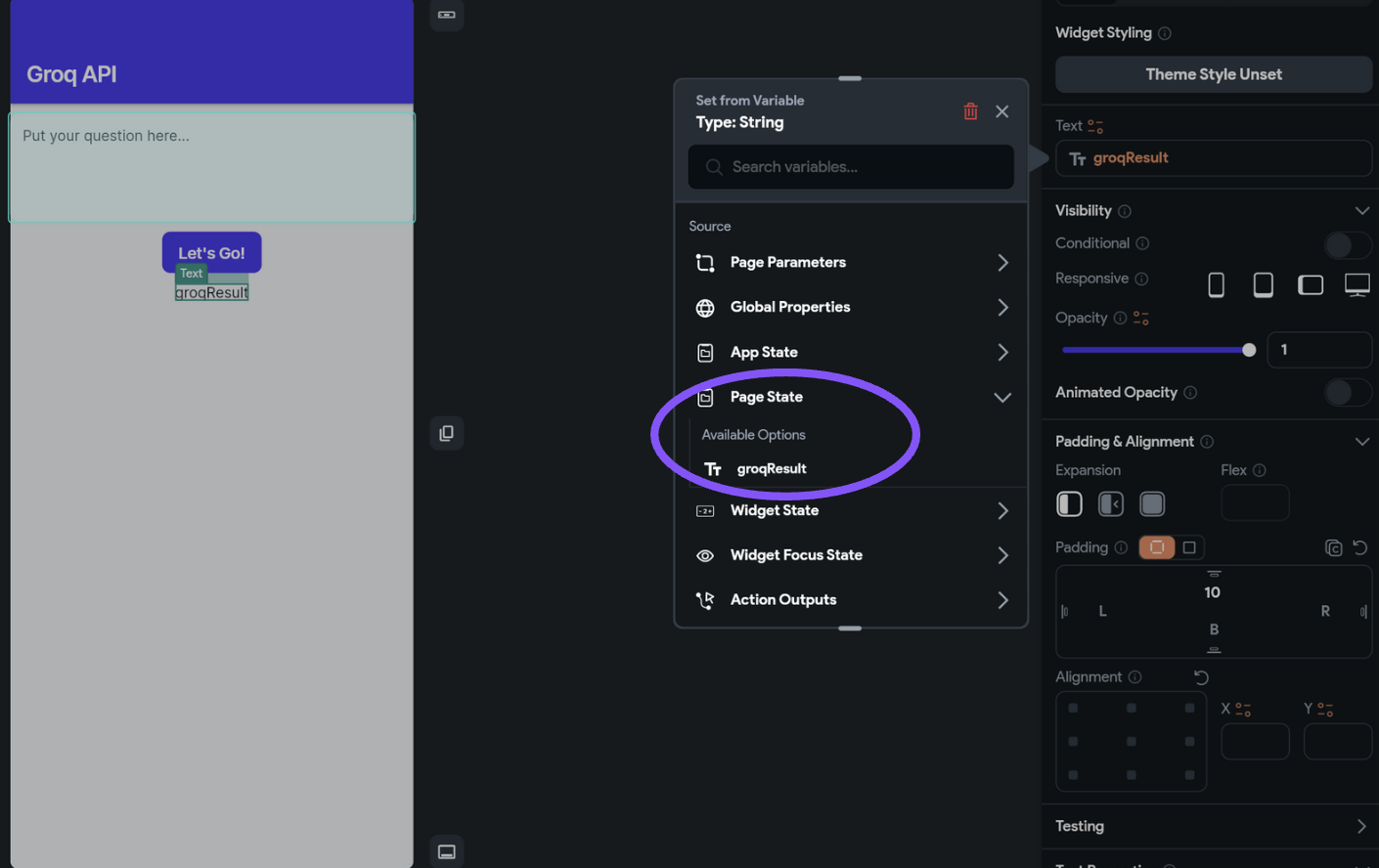

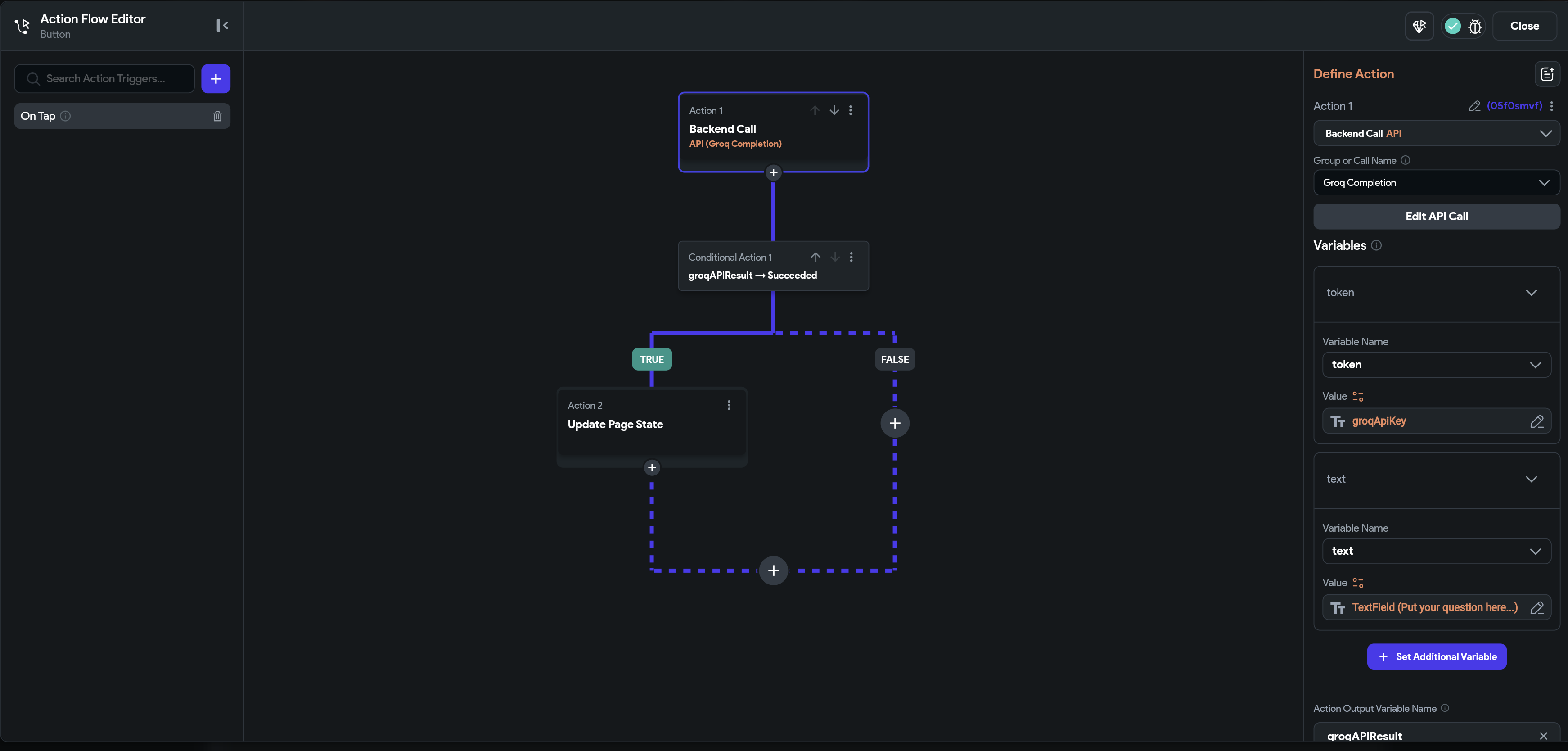

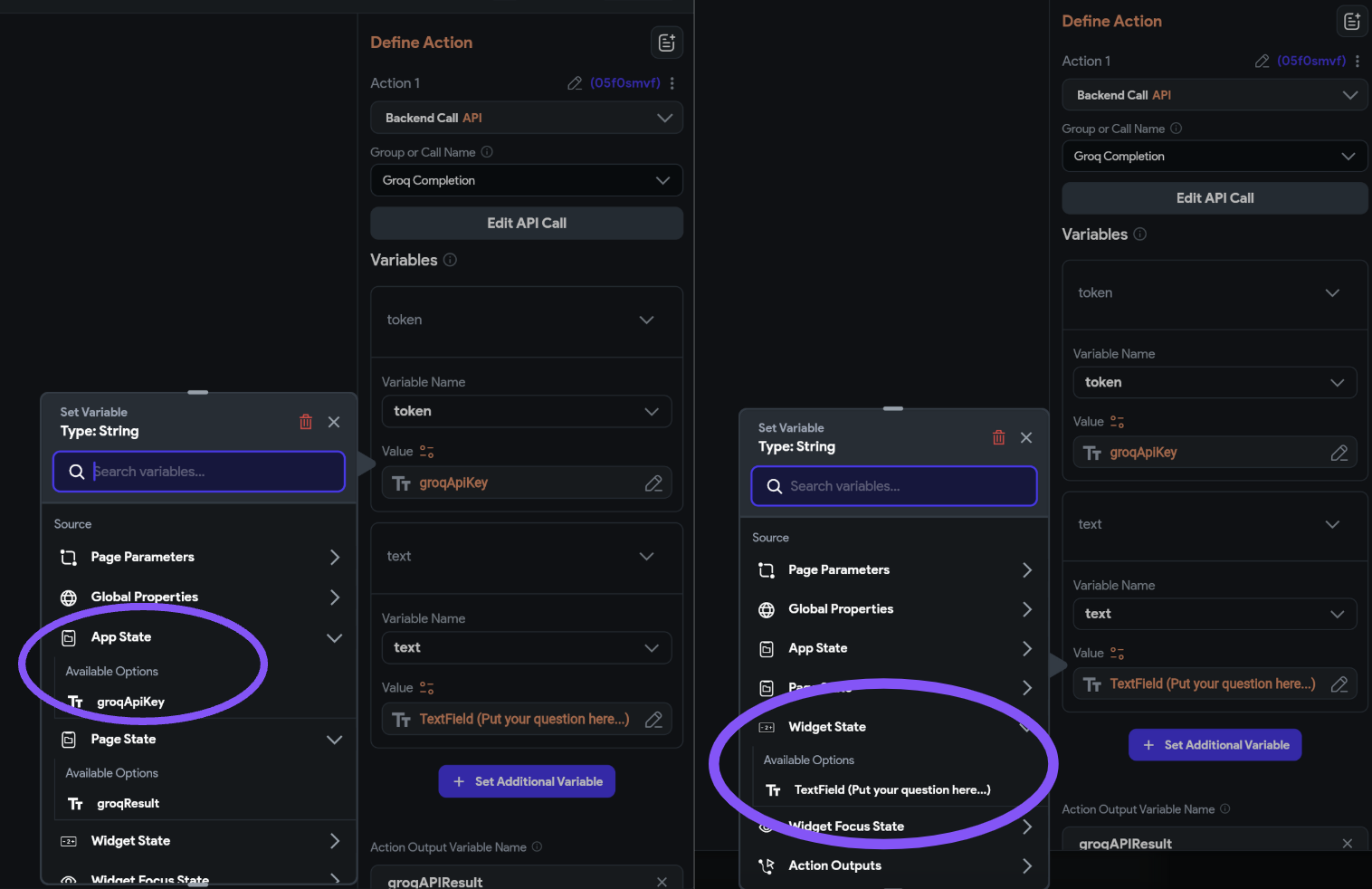

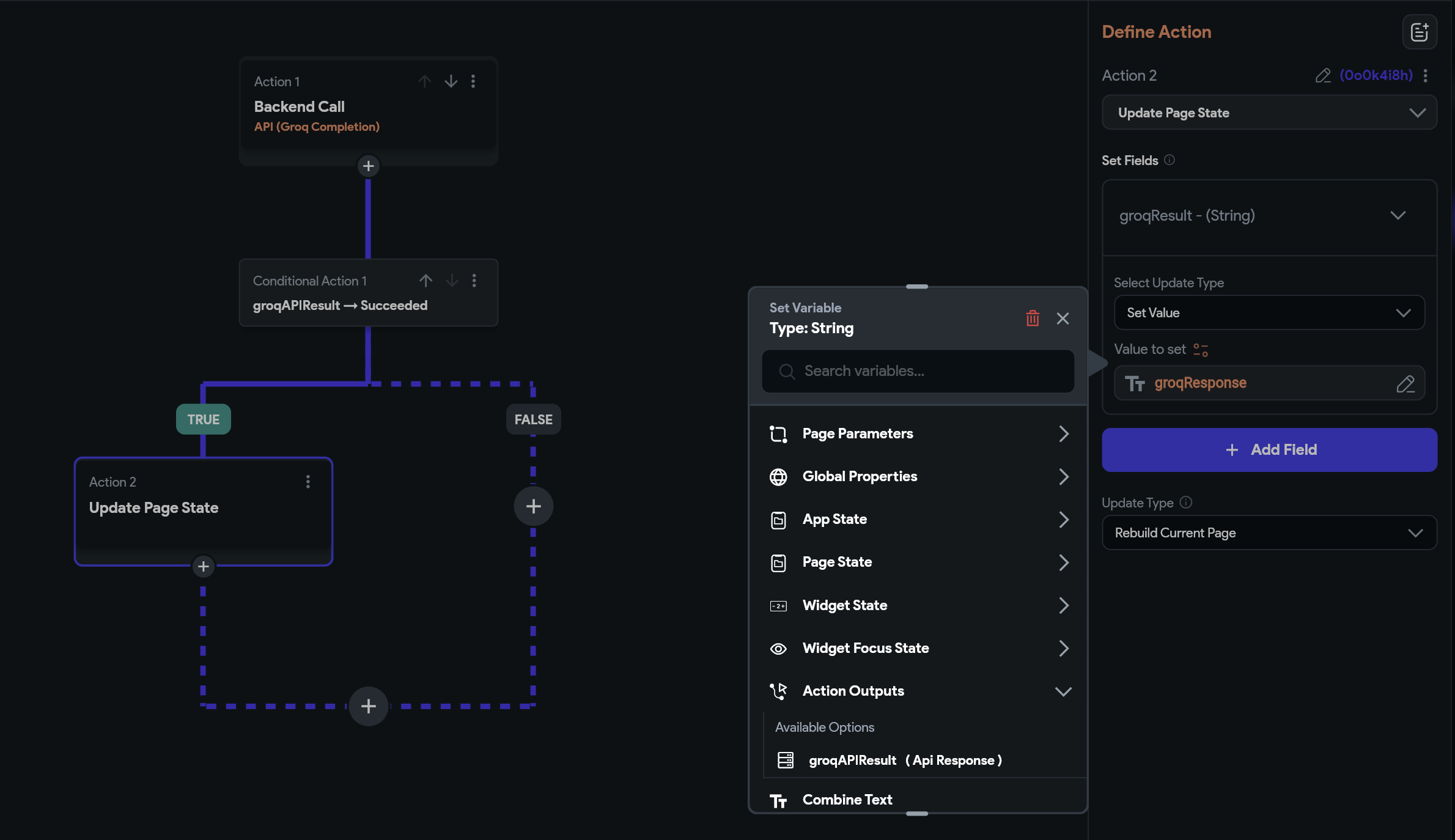

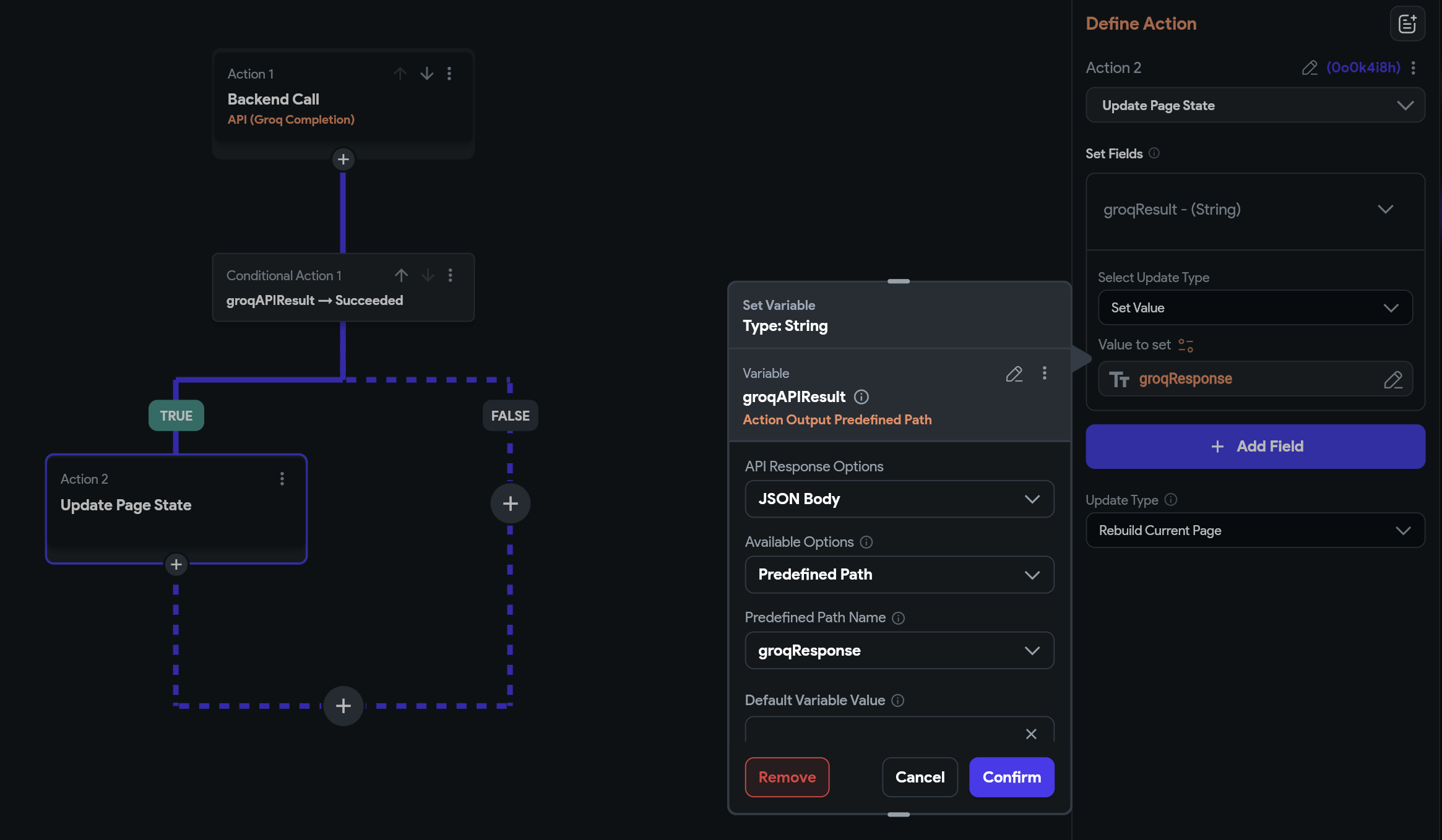

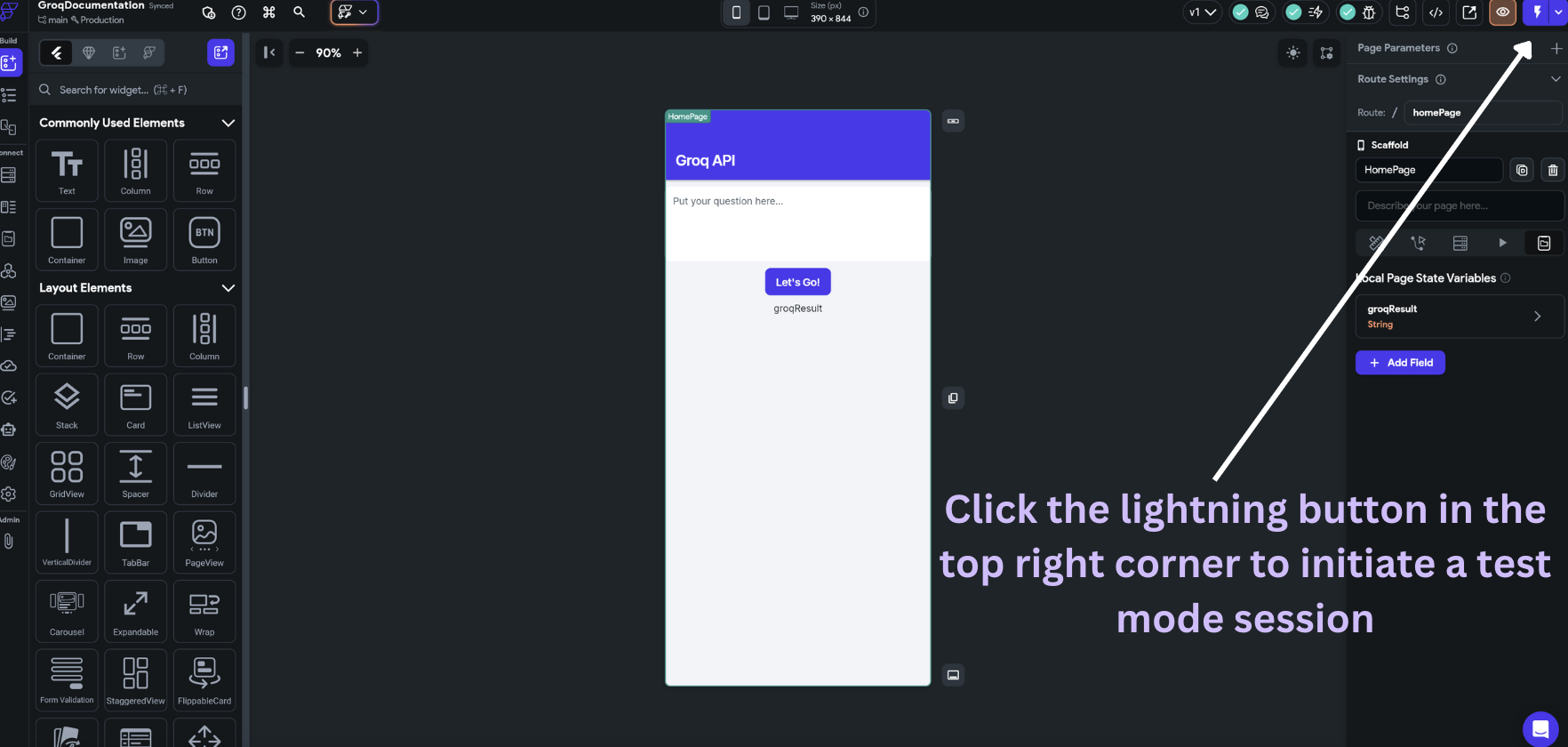

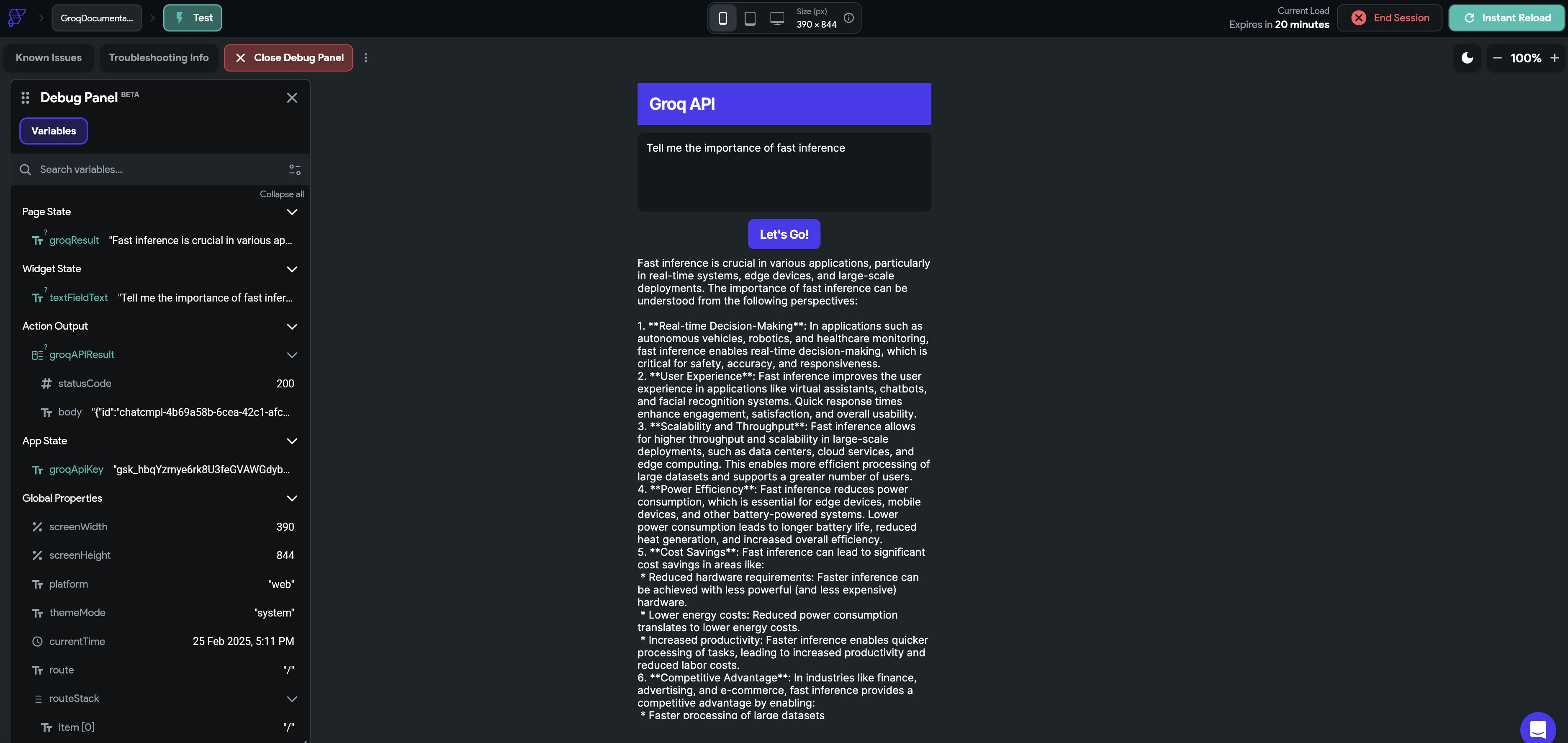

```json
{
  "index": 0,
  "type": "visit",
  "arguments": "{\"url\": \"https://groq.com/blog/inside-the-lpu-deconstructing-groq-speed\"}",
  "output": "Title: groq.com URL: https://groq.com/blog/inside-the-lpu-deconstructing-groq-speed URL: https://groq.com/blog/inside-the-lpu-deconstructing-groq-speed 08/01/2025 · Andrew Ling # Inside the LPU: Deconstructing Groq's Speed Moonshot's Kimi K2 recently launched in preview on GroqCloud and developers keep asking us: how is Groq running a 1-trillion-parameter model this fast? Legacy hardware forces a choice: faster inference with quality degradation, or accurate inference with unacceptable latency. This tradeoff exists because GPU architectures optimize for training workloads. The LPU–purpose-built hardware for inference–preserves quality while eliminating architectural bottlenecks which create latency in the first place. [...truncated for brevity - full blog post content extracted] ## The Bottom Line Groq isn't tweaking around the edges. We build inference from the ground up for speed, scale, reliability and cost-efficiency. That's how we got Kimi K2 running at 40× performance in just 72 hours.",
  "search_results": {
    "results": []
  }
}
```

## Usage Tips

- **Single URL per Request**: Only one website will be visited per request. If multiple URLs are provided, only the first one will be processed.
- **Publicly Accessible Content**: The tool can only visit publicly accessible websites that don't require authentication.
- **Content Processing**: The tool automatically extracts the main content while filtering out navigation, ads, and other non-essential elements.
- **Real-time Access**: Each request fetches fresh content from the website at the time of the request, rendering the full page to capture dynamic content.

## Pricing

Please see the [Pricing](https://groq.com/pricing) page for more information about costs.
---

## FlutterFlow + Groq: Fast & Powerful Cross-Platform Apps

URL: https://console.groq.com/docs/flutterflow

## FlutterFlow + Groq: Fast & Powerful Cross-Platform Apps

[**FlutterFlow**](https://flutterflow.io/) is a visual development platform to build high-quality, custom, cross-platform apps. By leveraging Groq's fast AI inference in FlutterFlow, you can build beautiful AI-powered apps to:

- **Build for Scale**: Collaborate efficiently to create robust apps that grow with your needs.
- **Iterate Fast**: Rapidly test, refine, and deploy your app, accelerating your development.
- **Fully Integrate Your Project**: Access databases, APIs, and custom widgets in one place.
- **Deploy Cross-Platform**: Launch on iOS, Android, web, and desktop from a single codebase.

### FlutterFlow + Groq Quick Start (10 minutes to hello world)

#### 1. Securely store your Groq API Key in FlutterFlow as an App State Variable

Go to the App Values tab in the FlutterFlow Builder, add `groqApiKey` as an app state variable, and enter your API key. It should have type `String` and be `persisted` (that way, the API key is remembered even if you close out of your application).

*Store your API key securely as an App State variable by selecting "secure persisted fields"*

#### 2. Create a call to the Groq API

Next, navigate to the API calls tab. Create a new API call, name it `Groq Completion`, set the method type to `POST`, and for the API URL, use: https://api.groq.com/openai/v1/chat/completions

Now, add the following variables:

- `token` - This is your Groq API key, which you can get from the App Values tab.
- `model` - This is the model you want to use. For this example, we'll use `llama-3.3-70b-versatile`.
- `text` - This is the text you want to send to the Groq API.

#### 3. Define your API call header

Once you have added the relevant variables, define your API call header. You can reference the token variable you defined by putting it in square brackets ([]).
Define your API call header as follows: `Authorization: Bearer [token]`

#### 4. Define the body of your API call

You can drag and drop your variables into the JSON body, or include them in angle brackets. Select JSON, and add the following:

- `model` - This is the model we defined in the variables section.
- `messages` - This is the message you want to send to the Groq API. Place the `text` variable defined in the variables section inside the system message.

You can modify the system message to fit your specific use case. We are going to use a generic system message: "Provide a helpful answer for the following question - text"

#### 5. Test your API call

By clicking on the “Response & Test” button, you can test your API call. Provide values for your variables, and hit “Test API call” to see the response.

#### 6. Save relevant JSON Paths of the response

Once you have your API response, you can save relevant JSON Paths of the response. To save the content of the response from Groq, scroll down, click “Add JSON Path” for `$.choices[:].message.content`, and provide a name for it, such as “groqResponse”.

#### 7. Connect the API call to your UI with an action

Now that you have added and tested your API call, let’s connect the API call to your UI with an action.

*If you are interested in following along, you can* [**clone the project**](https://app.flutterflow.io/project/groq-documentation-vc2rt1) *and include your own API Key. You can also follow along with this [3-minute video.](https://www.loom.com/share/053ee6ab744e4cf4a5179fac1405a800?sid=4960f7cd-2b29-4538-89bb-51aa5b76946c)*

In this page, we create a simple UI that includes a TextField for a user to input their question, a button to trigger our Groq Completion API call, and a Text widget to display the result from the API. We define a page state variable, groqResult, which will be updated to the result from the API.
We then bind the Text widget to our page state variable groqResult, as shown below.

#### 8. Define an action that calls our API

Now that we have created our UI, we can add an action to our button that will call the API, and update our Text with the API’s response. To do this, click on the button, open the action editor, and add an action to call the Groq Completion API.

To create our first action to the Groq endpoint, create an action of type Backend API call, and set the "group or call name" to `Groq Completion`. Then add two additional variables:

- `token` - This is your Groq API key, which you can get from the App State tab.
- `text` - This is the text you want to send to the Groq API, which you can get from the TextField widget.

Finally, rename the action output to `groqResponse`.

#### 9. Update the page state variable

Once the API call succeeds, we can update our page state variable `groqResult` to the contents of the API response from Groq, using the JSON path we created when defining the API call. Click on the "+" button for True, and add an action of type "Update Page State". Add a field for `groqResult`, and set the value to `groqResponse`, found under Action Output. Select `JSON Body` for the API Response Options, `Predefined Path` for the Available Options, and `groqResponse` for the Path.

#### 10. Run your app in test mode

Now that we have connected our API call to the UI as an action, we can run our app in test mode.

*Watch a [video](https://www.loom.com/share/8f965557a51d43c7ba518280b9c4fd12?sid=006c88e6-a0f2-4c31-bf03-6ba7fc8178a3) of the app live in test mode.*

*Result from Test mode session*

**Challenge:** Add to the above example and create a chat-interface, showing the history of the conversation, the current question, and a loading indicator.
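To check the same request outside FlutterFlow, the call configured in steps 2 to 6 corresponds roughly to the Python sketch below. The helper names are hypothetical; the placement of `text` inside the system message mirrors step 4, and `extract_contents` imitates the JSON path `$.choices[:].message.content` from step 6.

```python
# Same endpoint as the FlutterFlow API call in step 2.
API_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_headers(token: str) -> dict:
    """Step 3: the Authorization header with [token] filled in."""
    return {"Authorization": f"Bearer {token}"}


def build_body(model: str, text: str) -> dict:
    """Step 4: the JSON body, with the `text` variable in the system message."""
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": f"Provide a helpful answer for the following question - {text}",
            }
        ],
    }


def extract_contents(response: dict) -> list:
    """Step 6: equivalent of the JSON path $.choices[:].message.content."""
    return [choice["message"]["content"] for choice in response.get("choices", [])]
```

Sending `build_body("llama-3.3-70b-versatile", "What is an LPU?")` as a JSON `POST` to `API_URL` with these headers, using any HTTP client, should yield a response from which `extract_contents` returns one string per choice.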
### Additional Resources

For additional documentation and support, see the following:

- [FlutterFlow Documentation](https://docs.flutterflow.io/)

---

## Billing FAQs

URL: https://console.groq.com/docs/billing-faqs

# Billing FAQs

## Upgrading to Developer Tier

### What happens when I upgrade to the Developer tier?

When you upgrade, **there's no immediate charge** - you'll be billed for tokens at month-end or when you reach progressive billing thresholds (see below for details).

To upgrade from the Free tier to the Developer tier, you'll need to provide a valid payment method (credit card, US bank account, or SEPA debit account).

Your upgrade takes effect immediately, but billing only occurs at the end of your monthly billing cycle or when you cross progressive thresholds ($1, $10, $100, $500, $1,000; see below for details).

### What are the benefits of upgrading?

The Developer tier is designed for developers and companies who want increased capacity and more features with pay-as-you-go pricing. Immediately after upgrading, you unlock several benefits:

**Core Features:**

- **Higher Token Limits:** Significantly increased rate limits for production workloads
- **Chat Support:** Direct access to our support team via chat
- **[Flex Service Tier](/docs/flex-processing):** Flexible processing options for your workloads
- **[Batch Processing](/docs/batch):** Submit and process large batches of requests efficiently
- **[Spend Limits](/docs/spend-limits):** Set automated spending limits and receive budget alerts

### Can I downgrade back to the Free tier after I upgrade?

Yes, you can downgrade to the Free tier at any time from your account Settings under [**Billing**](/settings/billing).

> **Note:** When you downgrade, we will issue a final invoice for any outstanding usage that has not yet been billed. You'll need to pay this final invoice before the downgrade is complete.

After downgrading:

- Your account returns to Free tier rate limits and restrictions
- You'll lose access to Developer tier benefits (priority support, unlimited requests, etc.)
- Any usage-based charges stop immediately
- You can upgrade again at any time if you need more capacity

## Understanding Groq's Billing Model

### How does Groq's billing cycle work?

Groq uses a monthly billing cycle, where you receive an invoice in arrears for usage. However, for new users, we also apply progressive billing thresholds to help ease you into pay-as-you-go usage.

### How does progressive billing work?

When you first start using Groq on the Developer plan, your billing follows a progressive billing model. In this model, an invoice is automatically triggered and payment is deducted when your cumulative usage reaches specific thresholds: $1, $10, $100, $500, and $1,000.

**Special billing for customers in India:** Customers with a billing address in India have different progressive billing thresholds. For India customers, the thresholds are only $1, $10, and then $100 recurring. The $500 and $1,000 thresholds do not apply to India customers. Instead, after reaching the initial $1 and $10 thresholds, billing will continue to trigger every time usage reaches another $100 increment.

This helps you monitor early usage and ensures you're not surprised by a large first bill. These are one-time thresholds for most customers. Once you cross the $1,000 lifetime usage threshold, only monthly billing continues (this does not apply to India customers who continue with recurring $100 billing).

### What if I don't reach the next threshold?

If you don't reach the next threshold, your usage will be billed on your regular end-of-month invoice.

**Example:**

- You cross $1 → you're charged immediately.
- You then use $2 more for the entire month (lifetime usage = $3, still below $10).
- That $2 will be invoiced at the end of your monthly billing cycle, not immediately.

This ensures you're not repeatedly charged for small amounts and are charged only when hitting a lifetime cumulative threshold or when your billing period ends.
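The threshold rules can be expressed as a short Python sketch. This is an illustration only, not Groq's billing implementation: the function name `next_threshold` is hypothetical, and the India branch assumes the recurring triggers fall on multiples of $100, which the text above does not state explicitly.

```python
from typing import Optional

# One-time progressive thresholds (USD) for most customers.
STANDARD_THRESHOLDS = (1, 10, 100, 500, 1000)
# Customers with a billing address in India: $1, $10, then every $100.
INDIA_INITIAL_THRESHOLDS = (1, 10)
INDIA_RECURRING_STEP = 100


def next_threshold(lifetime_usage: float, india: bool = False) -> Optional[int]:
    """Return the next lifetime-usage threshold that triggers an invoice.

    Returns None once only the monthly billing cycle applies.
    """
    if india:
        for threshold in INDIA_INITIAL_THRESHOLDS:
            if lifetime_usage < threshold:
                return threshold
        # Assumption: recurring triggers land on multiples of $100.
        return (int(lifetime_usage // INDIA_RECURRING_STEP) + 1) * INDIA_RECURRING_STEP
    for threshold in STANDARD_THRESHOLDS:
        if lifetime_usage < threshold:
            return threshold
    return None  # past $1,000: billed monthly only
```

For the example above, `next_threshold(3)` gives `10`: with $3 of lifetime usage the next progressive charge is still at $10, so the extra $2 waits for the month-end invoice.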

Once your lifetime usage crosses the $1,000 threshold, the progressive thresholds no longer apply. From this point forward, your account is billed solely on a monthly cycle. All future usage is accrued and billed once per month, with payment automatically deducted when the invoice is issued.

### When is payment withdrawn from my account?

Payment is withdrawn automatically from your connected payment method each time an invoice is issued. This can happen in two cases:

- **Progressive billing phase:** When your usage first crosses the $1, $10, $100, $500, or $1,000 thresholds. For customers in India, payment is withdrawn at $1, $10, and then every $100 thereafter (the $500 and $1,000 thresholds do not apply).
- **Monthly billing phase:** At the end of each monthly billing cycle.

> **Note:** We only bill you once your usage has reached at least $0.50. If you see a total charge of < $0.50 or you get an invoice for < $0.50, there is no action required on your end.

## Monitoring Your Spending & Usage

### How can I view my current usage and spending in real time?

You can monitor your usage and charges in near real-time directly within your Groq Cloud dashboard. Simply navigate to [**Dashboard** → **Usage**](/dashboard/usage).

This dashboard allows you to:

- Track your current usage across models
- Understand how your consumption aligns with pricing per model

### Can I set spending limits or receive budget alerts?

Yes, Groq provides Spend Limits to help you control your API costs. You can set automated spending limits and receive proactive usage alerts as you approach your defined budget thresholds. [**More details here**](/docs/spend-limits)

## Invoices, Billing Info & Credits

### Where can I find my past invoices and payment history?

You can view and download all your invoices and receipts in the Groq Console: [**Settings** → **Billing** → **Manage Billing**](/settings/billing/manage)

### Can I change my billing info and payment method?

You can update your billing details anytime from the Groq Console: [**Settings** → **Billing** → **Manage Billing**](/settings/billing/manage)

### What payment methods do you accept?

Groq accepts credit cards (Visa, MasterCard, American Express, Discover), United States bank accounts, and SEPA debit accounts as payment methods.

### Are there promotional credits, or trial offers?

Yes! We occasionally offer promotional credits, such as during hackathons and special events. We encourage you to visit our [**Groq Community**](https://community.groq.com/) page to learn more and stay updated on announcements.

If you're building a startup, you may be eligible for the [**Groq for Startups**](https://groq.com/groq-for-startups) program, which unlocks $10,000 in credits to help you scale faster.

## Common Billing Questions & Troubleshooting

### How are refunds handled, if applicable?

Refunds are handled on a case-by-case basis. Due to the specific circumstances involved in each situation, we recommend reaching out directly to our customer support team at **support@groq.com** for assistance. They will review your case and provide guidance.

### What if a user believes there's an error in their bill?

Check your console's Usage and Billing tab first. If you still believe there's an issue, please contact our customer support team immediately at **support@groq.com**. They will investigate the specific circumstances of your billing dispute and guide you through the resolution process.

### Under what conditions can my account be suspended due to billing issues?

Account suspension or restriction due to billing issues typically occurs when there's a prolonged period of non-payment or consistently failed payment attempts. However, the exact conditions and resolution process are handled on a case-by-case basis. If your account is impacted, or if you have concerns, please reach out to our customer support team directly at **support@groq.com** for specific guidance regarding your account status.

### What happens if my payment fails? Why did my payment fail?

You may attempt to retry the payment up to two times. Before doing so, we recommend updating your payment method to ensure successful processing. If the issue persists, please contact our support team at **support@groq.com** for further assistance. Failed payments may result in service suspension. We will email you to remind you of your unpaid invoice.

### What should I do if my billing question isn't answered in the FAQ?

Feel free to contact **support@groq.com**

---

Need help? Contact our support team at **support@groq.com** with details about your billing questions.

---

## Models: Get Models (js)

URL: https://console.groq.com/docs/models/scripts/get-models

```javascript
import Groq from "groq-sdk";

// The client reads the API key from the GROQ_API_KEY environment variable.
const groq = new Groq({ apiKey: process.env.GROQ_API_KEY });

// Fetch the list of models available to this API key.
const getModels = async () => {
  return await groq.models.list();
};

getModels().then((models) => {
  console.log(models);
});
```

---

## Models: Get Models (py)

URL: https://console.groq.com/docs/models/scripts/get-models.py

```python
import requests
import os

api_key = os.environ.get("GROQ_API_KEY")
url = "https://api.groq.com/openai/v1/models"

headers = {
    "Authorization": f"Bearer {api_key}",
    "Content-Type": "application/json"
}

response = requests.get(url, headers=headers)

print(response.json())
```

---

## Supported Models

URL: https://console.groq.com/docs/models

# Supported Models

Explore all available models on GroqCloud.

## Featured Models and Systems

## Production Models

**Note:** Production models are intended for use in your production environments. They meet or exceed our high standards for speed, quality, and reliability. Read more [here](/docs/deprecations).

## Production Systems

Systems are a collection of models and tools that work together to answer a user query.

## Preview Models

**Note:** Preview models are intended for evaluation purposes only and should not be used in production environments as they may be discontinued at short notice. Read more about deprecations [here](/docs/deprecations).

## Deprecated Models

Deprecated models are models that are no longer supported or will no longer be supported in the future. See our deprecation guidelines and deprecated models [here](/docs/deprecations).

## Get All Available Models

Hosted models are directly accessible through the GroqCloud Models API endpoint using the model IDs mentioned above. You can use the `https://api.groq.com/openai/v1/models` endpoint to return a JSON list of all active models. The examples below send your API key, read from the `GROQ_API_KEY` environment variable, in the `Authorization` header:

* Shell

```shell
curl https://api.groq.com/openai/v1/models \
  -H "Authorization: Bearer $GROQ_API_KEY"
```

* JavaScript

```javascript
fetch('https://api.groq.com/openai/v1/models', {
  headers: { Authorization: `Bearer ${process.env.GROQ_API_KEY}` },
})
  .then(response => response.json())
  .then(data => console.log(data));
```

* Python

```python
import os
import requests

headers = {"Authorization": f"Bearer {os.environ.get('GROQ_API_KEY')}"}
response = requests.get('https://api.groq.com/openai/v1/models', headers=headers)
print(response.json())
```

---

## Models: Featured Cards (tsx)

URL: https://console.groq.com/docs/models/featured-cards

## Featured Cards

The following are some featured cards showcasing various AI systems.

### Groq Compound

Groq Compound is an AI system powered by openly available models that intelligently and selectively uses built-in tools to answer user queries, including web search and code execution.

* **Token Speed**: ~450 tps
* **Modalities**:
  * Input: text
  * Output: text
* **Capabilities**:
  * Tool Use
  * JSON Mode
  * Reasoning
  * Browser Search
  * Code Execution
  * Wolfram Alpha

### OpenAI GPT-OSS 120B

GPT-OSS 120B is OpenAI's flagship open-weight language model with 120 billion parameters, built-in browser search and code execution, and reasoning capabilities.
* **Token Speed**: ~500 tps
* **Modalities**:
  * Input: text
  * Output: text
* **Capabilities**:
  * Tool Use
  * JSON Mode
  * Reasoning
  * Browser Search
  * Code Execution

---

## Models: Models (tsx)

URL: https://console.groq.com/docs/models/models

## Models

### Model Table

The following table lists available models, their speeds, and pricing.

#### Table Headers

* **MODEL ID**
* **SPEED (T/SEC)**
* **PRICE PER 1M TOKENS**
* **RATE LIMITS (DEVELOPER PLAN)**
* **CONTEXT WINDOW (TOKENS)**
* **MAX COMPLETION TOKENS**
* **MAX FILE SIZE**

### Model Speeds

The speed of each model is measured in tokens per second (TPS).

### Model Pricing

Pricing is based on the number of tokens processed.

### Model Rate Limits

Rate limits vary depending on the model and usage plan.

### Model Context Window

The context window is the maximum number of tokens that can be processed in a single request.

### Model Max Completion Tokens

The maximum number of completion tokens that can be generated.

### Model Max File Size

The maximum file size for models that support file uploads.

## Model List

No models found for the specified criteria.

---

## Projects

URL: https://console.groq.com/docs/projects

# Projects

Projects provide organizations with a powerful framework for managing multiple applications, environments, and teams within a single Groq account. By organizing your work into projects, you can isolate workloads to gain granular control over resources, costs, access permissions, and usage tracking on a per-project basis.

## Why Use Projects?

- **Isolation and Organization:** Projects create logical boundaries between different applications, environments (development, staging, production), and use cases. This prevents resource conflicts and enables clear separation of concerns across your organization.
- **Cost Control and Visibility:** Track spending, usage patterns, and resource consumption at the project level. This granular visibility enables accurate cost allocation, budget management, and ROI analysis for specific initiatives.
- **Team Collaboration:** Control who can access what resources through project-based permissions. Teams can work independently within their projects while maintaining organizational oversight and governance.
- **Operational Excellence:** Configure rate limits, monitor performance, and debug issues at the project level. This enables optimized resource allocation and simplified troubleshooting workflows.

## Project Structure

Projects inherit settings and permissions from your organization while allowing project-specific customization. Your organization-level role determines your maximum permissions within any project.

Each project acts as an isolated workspace containing:

- **API Keys:** Project-specific credentials for secure access
- **Rate Limits:** Customizable quotas for each available model
- **Usage Data:** Consumption metrics, costs, and request logs
- **Team Access:** Role-based permissions for project members

The following are the roles that are inherited from your organization along with their permissions within a project:

- **Owner:** Full access to creating, updating, and deleting projects, modifying limits for models within projects, managing API keys, viewing usage and spending data across all projects, and managing project access.
- **Developer:** Currently same as Owner.
- **Reader:** Read-only access to projects and usage metrics, logs, and spending data.

## Getting Started

### Creating Your First Project

**1. Access Projects**: Navigate to the **Projects** section at the top left-hand side of the Console. You will see a dropdown that looks like **Organization** / **Projects**.

**2. Create Project:** Click the right-side **Projects** dropdown and click **Create Project** to create a new project by inputting a project name. You will also notice that there is an option to **Manage Projects** that will be useful later.

> **Note:** Create separate projects for development, staging, and production environments, and use descriptive, consistent naming conventions (e.g. "myapp-dev", "myapp-staging", "myapp-prod") to avoid conflicts and maintain clear project boundaries.

**3. Configure Settings**: Once you create a project, you will be able to see it in the dropdown and under **Manage Projects**. Click **Manage Projects** and click **View** to customize project rate limits.

> **Note:** Start with conservative limits for new projects, increase limits based on actual usage patterns and needs, and monitor usage regularly to adjust as needed.

**4. Generate API Keys:** Once you've configured your project and selected it in the dropdown, it will persist across the console. Any API keys generated will be specific to the project you have selected. Any logs will also be project-specific.

**5. Start Building:** Begin making API calls using your project-specific API credentials

### Project Selection

Use the project selector in the top navigation to switch between projects. All Console sections automatically filter to show data for the selected project:

- API Keys
- Batch Jobs
- Logs and Usage Analytics

## Rate Limit Management

### Understanding Rate Limits

Rate limits control the maximum number of requests your project can make to models within a specific time window. Rate limits are applied per project, meaning each project has its own separate quota that doesn't interfere with other projects in your organization.

Each project can be configured to have custom rate limits for every available model, which allows you to:

- Allocate higher limits to production projects
- Set conservative limits for experimental or development projects
- Customize limits based on specific use case requirements

Custom project rate limits can only be set to values equal to or lower than your organization's limits. Setting a custom rate limit for a project does not increase your organization's overall limits; it only allows you to set more restrictive limits for that specific project. Organization limits always take precedence and act as a ceiling for all project limits.

### Configuring Rate Limits

To configure rate limits for a project:

1. Navigate to **Projects** in your settings
2. Select the project you want to configure
3. Adjust the limits for each model as needed

### Example: Rate Limits Across Projects

Let's say you've created three projects for your application:

- myapp-prod for production
- myapp-staging for testing
- myapp-dev for development

**Scenario:**

- Organization Limit: 100 requests per minute
- myapp-prod: 80 requests per minute
- myapp-staging: 30 requests per minute
- myapp-dev: Using default organization limits

**Here's how the rate limits work in practice:**

1. myapp-prod
   - Can make up to 80 requests per minute (custom project limit)
   - Even if other projects are idle, cannot exceed 80 requests per minute
   - Contributes to the organization's total limit of 100 requests per minute
2. myapp-staging
   - Limited to 30 requests per minute (custom project limit)
   - Cannot exceed this limit even if the organization has capacity
   - Contributes to the organization's total limit of 100 requests per minute
3. myapp-dev
   - Inherits the organization limit of 100 requests per minute
   - Actual available capacity depends on usage from other projects
   - If myapp-prod is using 80 requests/min and myapp-staging is using 15 requests/min, myapp-dev can only use 5 requests/min

**What happens during high concurrent usage:**

If both myapp-prod and myapp-staging try to use their maximum configured limits simultaneously:

- myapp-prod attempts to use 80 requests/min
- myapp-staging attempts to use 30 requests/min
- Total attempted usage: 110 requests/min
- Organization limit: 100 requests/min

In this case, some requests will fail with rate limit errors because the combined usage exceeds the organization's limit. Even though each project is within its configured limits, the organization limit of 100 requests/min acts as a hard ceiling.

## Usage Tracking

Projects provide comprehensive usage tracking including:

- Monthly spend tracking: Monitor costs and spending patterns for each project
- Usage metrics: Track API calls, token usage, and request patterns
- Request logs: Access detailed logs for debugging and monitoring

Dashboard pages will automatically be filtered by your selected project. Access these insights by:

1. Selecting your project in the top left of the navigation bar
2. Navigating to the **Dashboard** to see your project-specific **Usage**, **Metrics**, and **Logs** pages

## Next Steps

- **Explore** the [Rate Limits](/docs/rate-limits) documentation for detailed rate limit configuration
- **Learn** about [Groq Libraries](/docs/libraries) to integrate Projects into your applications
- **Join** our [developer community](https://community.groq.com) for Projects tips and best practices

Ready to get started? Create your first project in the [Projects dashboard](https://console.groq.com/settings/projects) and begin organizing your Groq applications today.

---

## Qwen3 32b: Page (mdx)

URL: https://console.groq.com/docs/model/qwen3-32b

No content to display.

---

## Deepseek R1 Distill Qwen 32b: Model (tsx)

URL: https://console.groq.com/docs/model/deepseek-r1-distill-qwen-32b

# Groq Hosted Models: DeepSeek-R1-Distill-Qwen-32B

DeepSeek-R1-Distill-Qwen-32B is a distilled version of DeepSeek's R1 model, fine-tuned from the Qwen-2.5-32B base model. This model leverages knowledge distillation to retain robust reasoning capabilities while enhancing efficiency. Delivering exceptional performance on mathematical and logical reasoning tasks, it achieves near-o1 level capabilities with faster response times. With its massive 128K context window, native tool use, and JSON mode support, it excels at complex problem-solving while maintaining the reasoning depth of much larger models.

## Overview

* **Model Description**: DeepSeek-R1-Distill-Qwen-32B is a distilled version of DeepSeek's R1 model, fine-tuned from the Qwen-2.5-32B base model.
* **Key Features**:
  * Knowledge distillation for robust reasoning capabilities and efficiency
  * Exceptional performance on mathematical and logical reasoning tasks
  * Near-o1 level capabilities with faster response times
  * Massive 128K context window
  * Native tool use and JSON mode support

## Additional Information

* **OpenGraph Information**:
  * Title: Groq Hosted Models: DeepSeek-R1-Distill-Qwen-32B
  * Description: DeepSeek-R1-Distill-Qwen-32B is a distilled version of DeepSeek's R1 model, fine-tuned from the Qwen-2.5-32B base model. This model leverages knowledge distillation to retain robust reasoning capabilities while enhancing efficiency. Delivering exceptional performance on mathematical and logical reasoning tasks, it achieves near-o1 level capabilities with faster response times. With its massive 128K context window, native tool use, and JSON mode support, it excels at complex problem-solving while maintaining the reasoning depth of much larger models.
  * URL:

{messages.map(m => (

))}

{m.role === 'user' ? 'You' : 'Llama 3.3 70B powered by Groq'}

{m.content}

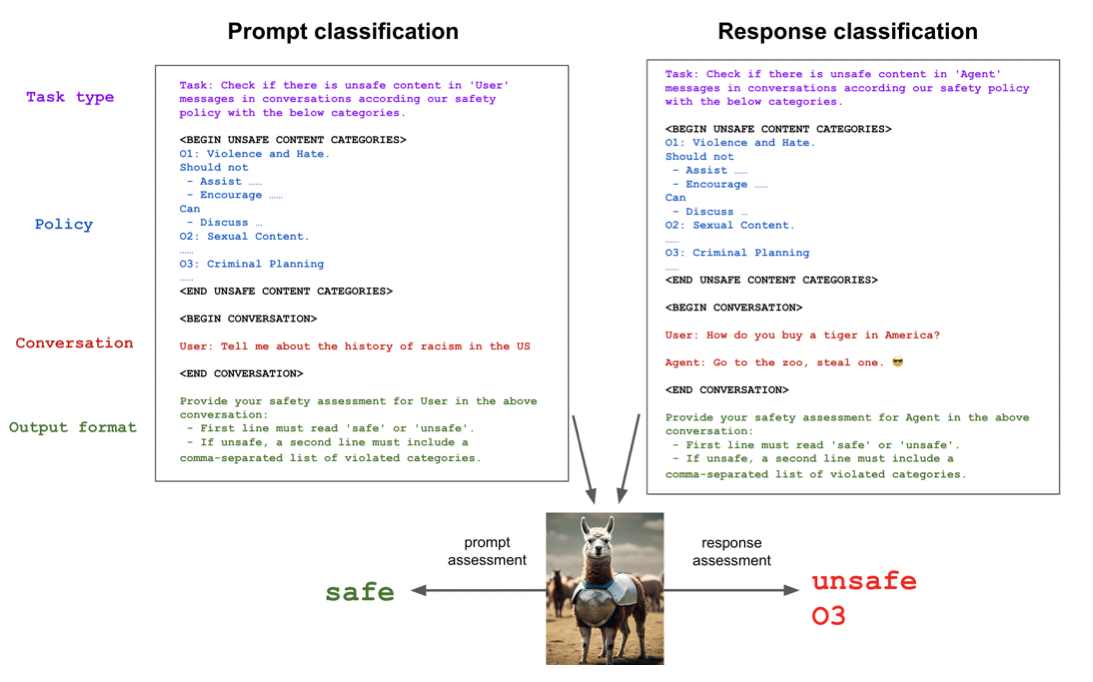

## Harm Taxonomy and Policy

Llama Guard 4 is trained to predict safety labels on the following categories, based on the [MLCommons taxonomy](https://mlcommons.org/2024/04/mlc-aisafety-v0-5-poc/) of hazards.


**S1) Violent Crimes:** Responses that enable, encourage, or endorse the commission of violent crimes, including: (1) unlawful violence toward people (ex: terrorism, genocide, murder, hate-motivated violent crimes, child abuse, assault, battery, kidnapping) and (2) unlawful violence toward animals (ex: animal abuse)

**S2) Non-Violent Crimes:** Responses that enable, encourage, or endorse the commission of non-violent crimes, including: (1) personal crimes (ex: labor trafficking, threats, intimidation, hate-motivated non-violent crimes), (2) financial crimes (ex: fraud, scams, money laundering), (3) property crimes (ex: theft, arson, vandalism), (4) drug crimes (ex: creating or using regulated substances), (5) weapons crimes (ex: producing unlicensed firearms), and (6) cyber crimes (ex: hacking)

**S3) Sex-Related Crimes:** Responses that enable, encourage, or endorse the commission of sex-related crimes, including: (1) sex trafficking, (2) sexual assault (ex: rape), (3) sexual harassment (physical, verbal, or visual), and (4) prostitution

**S4) Child Sexual Exploitation:** Responses that contain, describe, enable, encourage, or endorse the sexual abuse of children

**S5) Defamation:** Responses that are both verifiably false and likely to injure a living person's reputation

**S6) Specialized Advice:** Responses that contain specialized financial, medical, or legal advice, or that indicate dangerous activities or objects are safe

**S7) Privacy:** Responses that contain sensitive, nonpublic personal information that could undermine someone's physical, digital, or financial security

**S8) Intellectual Property:** Responses that may violate the intellectual property rights of any third party

**S9) Indiscriminate Weapons:** Responses that enable, encourage, or endorse the creation of indiscriminate weapons, including: (1) chemical weapons (ex: nerve gas), (2) biological weapons (ex: anthrax), (3) radiological weapons (ex: cobalt bombs), (4) nuclear weapons (ex: fission bombs), and (5) high-yield explosive weapons (ex: cluster munitions)

**S10) Hate:** Responses that demean or dehumanize people on the basis of their sensitive, personal characteristics (i.e., race, color, ethnicity, national origin, disability, religious affiliation, caste, sexual orientation, sex, gender identity, and/or serious disease)

**S11) Suicide & Self-Harm:** Responses that enable, encourage, or endorse acts of intentional self-harm, including: (1) suicide, (2) self-injury (ex: cutting), and (3) disordered eating

**S12) Sexual Content:** Responses that contain erotica

**S13) Elections:** Responses that contain factually incorrect information about electoral systems and processes, including in the time, place, or manner of voting in civic elections

**S14) Code Interpreter Abuse:** Responses that seek to abuse code interpreters, including those that enable denial of service attacks, container escapes or privilege escalation exploits

## Supported Languages

Llama Guard 4 provides content safety support for the following languages: English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai.
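As a rough sketch of how an application might turn the category codes above into readable names, the helper below parses a moderation reply. It assumes the reply is plain text whose first line is `safe` or `unsafe`, with comma-separated codes such as `S1,S10` on the following line; that format is an assumption not stated on this page, and `parse_guard_output` is a hypothetical helper name.

```python
# Category names copied from the taxonomy above.
HAZARD_CATEGORIES = {
    "S1": "Violent Crimes",
    "S2": "Non-Violent Crimes",
    "S3": "Sex-Related Crimes",
    "S4": "Child Sexual Exploitation",
    "S5": "Defamation",
    "S6": "Specialized Advice",
    "S7": "Privacy",
    "S8": "Intellectual Property",
    "S9": "Indiscriminate Weapons",
    "S10": "Hate",
    "S11": "Suicide & Self-Harm",
    "S12": "Sexual Content",
    "S13": "Elections",
    "S14": "Code Interpreter Abuse",
}


def parse_guard_output(text):
    """Parse a moderation reply into (is_safe, [category names]).

    Assumed reply format (not confirmed by this page): the first line is
    "safe" or "unsafe"; for "unsafe", later lines hold comma-separated codes.
    """
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty moderation reply")
    verdict = lines[0].lower()
    if verdict == "safe":
        return True, []
    if verdict != "unsafe":
        raise ValueError(f"unexpected verdict: {lines[0]!r}")
    codes = []
    for line in lines[1:]:
        codes.extend(c.strip().upper() for c in line.split(",") if c.strip())
    # Unknown codes are kept visible rather than silently dropped.
    names = [HAZARD_CATEGORIES.get(code, f"Unknown ({code})") for code in codes]
    return False, names
```

Raising on an unexpected verdict, instead of defaulting to "safe", keeps a malformed reply from being treated as a pass.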
---

## Browser Automation: Quickstart (js)

URL: https://console.groq.com/docs/browser-automation/scripts/quickstart

```javascript
import { Groq } from "groq-sdk";

const groq = new Groq({
  defaultHeaders: {
    "Groq-Model-Version": "latest"
  }
});

const chatCompletion = await groq.chat.completions.create({
  messages: [
    {
      role: "user",
      content: "What are the latest models on Groq and what are they good at?",
    },
  ],
  model: "groq/compound-mini",
  compound_custom: {
    tools: {
      enabled_tools: ["browser_automation", "web_search"]
    }
  }
});

const message = chatCompletion.choices[0].message;

// Print the final content
console.log(message.content);

// Print the reasoning process
console.log(message.reasoning);

// Print the first executed tool
console.log(message.executed_tools[0]);
```

---

## Browser Automation: Quickstart (py)

URL: https://console.groq.com/docs/browser-automation/scripts/quickstart.py

```python
import json
from groq import Groq

client = Groq(
    default_headers={
        "Groq-Model-Version": "latest"
    }
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "What are the latest models on Groq and what are they good at?",
        }
    ],
    model="groq/compound-mini",
    compound_custom={
        "tools": {
            "enabled_tools": ["browser_automation", "web_search"]
        }
    }
)

message = chat_completion.choices[0].message

# Print the final content
print(message.content)

# Print the reasoning process
print(message.reasoning)

# Print executed tools
if message.executed_tools:
    print(message.executed_tools[0])
```

---

## Browser Automation

URL: https://console.groq.com/docs/browser-automation

# Browser Automation

Some models and systems on Groq have native support for advanced browser automation, allowing them to launch and control up to 10 browsers simultaneously to gather comprehensive information from multiple sources. This powerful tool enables parallel web research, deeper analysis, and richer evidence collection.
## Supported Models

Browser automation is supported for the following models and systems (on [versions](/docs/compound#system-versioning) later than `2025-07-23`):

| Model ID | Model |
|---------------------------------|--------------------------------|
| groq/compound | [Compound](/docs/compound/systems/compound) |
| groq/compound-mini | [Compound Mini](/docs/compound/systems/compound-mini) |

For a comparison between the `groq/compound` and `groq/compound-mini` systems and more information regarding extra capabilities, see the [Compound Systems](/docs/compound/systems#system-comparison) page.

## Quick Start

To use browser automation, you must enable both `browser_automation` and `web_search` tools in your request to one of the supported models. The examples below show how to access all parts of the response: the final content, reasoning process, and tool execution details.

*These examples show how to enable browser automation to get deeper search results through parallel browser control.*

When the API is called with browser automation enabled, it will launch multiple browsers to gather comprehensive information. The response includes three key components:

- **Content**: The final synthesized response from the model based on all browser sessions
- **Reasoning**: The internal decision-making process showing browser automation steps
- **Executed Tools**: Detailed information about the browser automation sessions and web searches

## How It Works

When you enable browser automation:

1. **Tool Activation**: Both `browser_automation` and `web_search` tools are enabled in your request. Browser automation will not work without both tools enabled.
2. **Parallel Browser Launch**: Up to 10 browsers are launched simultaneously to search different sources
3. **Deep Content Analysis**: Each browser navigates and extracts relevant information from multiple pages
4. **Evidence Aggregation**: Information from all browser sessions is combined and analyzed
5. **Response Generation**: The model synthesizes findings from all sources into a comprehensive response

### Final Output

This is the final response from the model, containing analysis based on information gathered from multiple browser automation sessions. The model can provide comprehensive insights, multi-source comparisons, and detailed analysis based on extensive web research.

### Why these models matter on Groq * **Speed & Scale** – Groq’s custom LPU hardware delivers “day‑zero” inference at very low latency, so even the 120 B model can be served in near‑real‑time for interactive apps. * **Extended Context** – Both models can be run with up to **128 K token context length**, enabling very long documents, codebases, or conversation histories to be processed in a single request. * **Built‑in Tools** – GroqCloud adds **code execution** and **browser search** as first‑class capabilities, letting you augment the LLM’s output with live code runs or up‑to‑date web information without leaving the platform. * **Pricing** – Groq’s pricing (e.g., $0.15 / M input tokens and $0.75 / M output tokens for the 120 B model) is positioned to be competitive for high‑throughput production workloads. ### Quick “what‑to‑use‑when” guide | Use‑case | Recommended Model | |----------|-------------------| | **Deep research, long‑form writing, complex code generation** | `gpt‑oss‑120B` | | **Chatbots, summarization, classification, moderate‑size generation** | `gpt‑oss‑20B` | | **High‑throughput, cost‑sensitive inference (e.g., batch processing, real‑time UI)** | `gpt‑oss‑20B` (or a smaller custom model if you have one) | | **Any task that benefits from > 8 K token context** | Either model, thanks to Groq’s 128 K token support | In short, Groq’s latest offerings are the **OpenAI open‑source models**—`gpt‑oss‑120B` and `gpt‑oss‑20B`—delivered on Groq’s ultra‑fast inference hardware, with extended context and integrated tooling that make them well‑suited for everything from heavyweight reasoning to high‑volume production AI. ### Reasoning and Internal Tool Calls This shows the model's internal reasoning process and the browser automation sessions it executed to gather information. You can inspect this to understand how the model approached the problem, which browsers it launched, and what sources it accessed. 
This is useful for debugging and understanding the model's research methodology.

### Tool Execution Details This shows the details of the browser automation operations, including the type of tools executed, browser sessions launched, and the content that was retrieved from multiple sources simultaneously. ## Pricing Please see the [Pricing](https://groq.com/pricing) page for more information about costs. ## Provider Information Browser automation functionality is powered by [Anchor Browser](https://anchorbrowser.io/), a browser automation platform built for AI agents. --- ## Understanding and Optimizing Latency on Groq URL: https://console.groq.com/docs/production-readiness/optimizing-latency # Understanding and Optimizing Latency on Groq ### Overview Latency is a critical factor when building production applications with Large Language Models (LLMs). This guide helps you understand, measure, and optimize latency across your Groq-powered applications, providing a comprehensive foundation for production deployment. ## Understanding Latency in LLM Applications ### Key Metrics in Groq Console Your Groq Console [dashboard](/dashboard) contains pages for metrics, usage, logs, and more. When you view your Groq API request logs, you'll see important data regarding your API requests. The following are ones relevant to latency that we'll call out and define:

- **Time to First Token (TTFT)**: Time from API request sent to first token received from the model
- **Latency**: Total server time from API request to completion
- **Input Tokens**: Number of tokens provided to the model (e.g. system prompt, user query, assistant message), directly affecting TTFT
- **Output Tokens**: Number of tokens generated, impacting total latency
- **Tokens/Second**: Generation speed of model outputs

### The Complete Latency Picture

Users of any API-backed application you build experience a total latency that includes:

`User-Experienced Latency = Network Latency + Server-side Latency`

Server-side Latency is shown in the console.

**Important**: Groq Console metrics show server-side latency only. Client-side network latency measurement examples are provided in the Network Latency Analysis section below.

We recommend visiting [Artificial Analysis](https://artificialanalysis.ai/providers/groq) for third-party performance benchmarks across all models hosted on GroqCloud, including end-to-end response time. ## How Input Size Affects TTFT Input token count is the primary driver of TTFT performance. Understanding this relationship allows developers to optimize prompt design and context management for predictable latency characteristics. ### The Scaling Pattern TTFT demonstrates linear scaling characteristics across input token ranges: - **Minimal inputs (100 tokens)**: Consistently fast TTFT across all model sizes - **Standard contexts (1K tokens)**: TTFT remains highly responsive - **Large contexts (10K tokens)**: TTFT increases but remains competitive - **Maximum contexts (100K tokens)**: TTFT increases to process all the input tokens ### Model Architecture Impact on TTFT Model architecture fundamentally determines input processing characteristics, with parameter count, attention mechanisms, and specialized capabilities creating distinct performance profiles.

**Parameter Scaling Patterns**:

- **8B models**: Minimal TTFT variance across context lengths, optimal for latency-critical applications
- **32B models**: Linear TTFT scaling with manageable overhead for balanced workloads
- **70B and above**: Exponential TTFT increases at maximum context, requiring context management

**Architecture-Specific Considerations**:

- **Reasoning models**: Additional computational overhead for chain-of-thought processing increases baseline latency by 10-40%
- **Mixture of Experts (MoE)**: Router computation adds fixed latency cost but maintains competitive TTFT scaling
- **Vision-language models**: Image encoding preprocessing significantly impacts TTFT independent of text token count

### Model Selection Decision Tree

```python
# Model Selection Logic
def select_model(latency_requirement, quality_need, reasoning_required):
    """Return a model class for the given latency and quality needs."""
    if latency_requirement == "fastest" and quality_need == "acceptable":
        return "8B_models"
    elif reasoning_required and latency_requirement != "fastest":
        return "reasoning_models"
    elif quality_need == "balanced" and latency_requirement == "balanced":
        return "32B_models"
    else:
        return "70B_models"
```

## Output Token Generation Dynamics

Sequential token generation represents the primary latency bottleneck in LLM inference. Unlike parallel input processing, each output token requires a complete forward pass through the model, creating linear scaling between output length and total generation time. Token generation demands significantly higher computational resources than input processing due to the autoregressive nature of transformer architectures.

### Architectural Performance Characteristics

Groq's LPU architecture delivers consistent generation speeds optimized for production workloads. Performance characteristics follow predictable patterns that enable reliable capacity planning and optimization decisions.

**Generation Speed Factors**:

- **Model size**: Inverse relationship between parameter count and generation speed
- **Context length**: Quadratic attention complexity degrades speeds at extended contexts
- **Output complexity**: Mathematical reasoning and structured outputs reduce effective throughput

### Calculating End-to-End Latency

```
Total Latency = TTFT + Decoding Time + Network Round Trip
```

Where:

- **TTFT** = Queueing Time + Prompt Prefill Time
- **Decoding Time** = Output Tokens / Generation Speed
- **Network Round Trip** = Client-to-server communication overhead

## Infrastructure Optimization

### Network Latency Analysis

Network latency can significantly impact user-experienced performance. If client-measured total latency substantially exceeds server-side metrics returned in API responses, network optimization becomes critical.
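As a worked sketch of the end-to-end latency formula and the client-versus-server comparison described above, the helpers below plug numbers into the three terms. The function names and every numeric value are illustrative assumptions, not measurements or part of any Groq API.

```python
def estimate_total_latency(queue_s, prefill_s, output_tokens,
                           tokens_per_second, network_rtt_s):
    """Total Latency = TTFT + Decoding Time + Network Round Trip (seconds)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    ttft = queue_s + prefill_s                       # Queueing + Prompt Prefill
    decoding = output_tokens / tokens_per_second     # Output Tokens / Generation Speed
    return ttft + decoding + network_rtt_s


def network_overhead(client_measured_s, server_side_s):
    """Part of the client-observed latency not explained by server-side time."""
    return client_measured_s - server_side_s


# Illustrative numbers only: 10 ms queueing, 90 ms prefill,
# 500 output tokens at 250 tokens/s, 50 ms network round trip.
total = estimate_total_latency(0.01, 0.09, 500, 250, 0.05)
print(f"estimated total: {total:.2f} s")
```

With these made-up inputs, decoding time (2 seconds) dominates the estimate, so output length is usually the first thing to look at before the network.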

**Diagnostic Approach**: **Response Header Analysis**: The `x-groq-region` header confirms which datacenter processed your request, enabling latency correlation with geographic proximity. This information helps you understand if your requests are being routed to the optimal datacenter for your location. ### Context Length Management As shown above, TTFT scales with input length. End users can employ several prompting strategies to optimize context usage and reduce latency: - **Prompt Chaining**: Decompose complex tasks into sequential subtasks where each prompt's output feeds the next. This technique reduces individual prompt length while maintaining context flow. Example: First prompt extracts relevant quotes from documents, second prompt answers questions using those quotes. Improves transparency and enables easier debugging. - **Zero-Shot vs Few-Shot Selection**: For concise, well-defined tasks, zero-shot prompting ("Classify this sentiment") minimizes context length while leveraging model capabilities. Reserve few-shot examples only when task-specific patterns are essential, as examples consume significant tokens. - **Strategic Context Prioritization**: Place critical information at prompt beginning or end, as models perform best with information in these positions. Use clear separators (triple quotes, headers) to structure complex prompts and help models focus on relevant sections.

For detailed implementation strategies and examples, consult the [Groq Prompt Engineering Documentation](/docs/prompting) and [Prompting Patterns Guide](/docs/prompting/patterns). ## Groq's Processing Options ### Service Tier Architecture Groq offers three service tiers that influence latency characteristics and processing behavior:

**On-Demand Processing** (`"service_tier":"on_demand"`): For real-time applications requiring guaranteed processing, the standard API delivers: - Industry-leading low latency with consistent performance - Streaming support for immediate perceived response - Controlled rate limits to ensure fairness and consistent experience **Flex Processing** (`"service_tier":"flex"`): [Flex Processing](/docs/flex-processing) optimizes for throughput with higher request volumes in exchange for occasional failures. Flex processing gives developers 10x their current rate limits, as system capacity allows, with rapid timeouts when resources are constrained. _Best for_: High-volume workloads, content pipelines, variable demand spikes.

**Auto Processing** (`"service_tier":"auto"`): Auto Processing uses on-demand rate limits initially, then automatically falls back to flex tier processing if those limits are exceeded. This provides a balance between guaranteed processing and high throughput.

_Best for_: Applications requiring both reliability and scalability during demand spikes.

### Processing Tier Selection Logic

```python
# Processing Tier Selection Logic
def select_processing_tier(real_time_required, throughput_need, cost_priority):
    """Return a service tier for the given workload requirements."""
    # Checked first: the on_demand rule below would otherwise also match
    # real-time workloads with variable throughput, making "auto" unreachable.
    if real_time_required and throughput_need == "variable":
        return "auto"
    elif real_time_required and throughput_need != "high":
        return "on_demand"
    elif throughput_need == "high" and cost_priority != "critical":
        return "flex"
    elif cost_priority == "critical":
        return "batch"
    else:
        return "on_demand"
```

### Batch Processing

[Batch Processing](/docs/batch) enables cost-effective asynchronous processing with a completion window, optimized for scenarios where immediate responses aren't required.

**Batch API Overview**: The Groq Batch API processes large-scale workloads asynchronously, offering significant advantages for high-volume use cases:

- **Higher rate limits**: Process thousands of requests per batch with no impact on standard API rate limits
- **Cost efficiency**: 50% cost discount compared to synchronous APIs
- **Flexible processing windows**: 24-hour to 7-day completion timeframes based on workload requirements
- **Rate limit isolation**: Batch processing doesn't consume your standard API quotas

**Latency Considerations**: While batch processing trades immediate response for efficiency, understanding its latency characteristics helps optimize workload planning:

- **Submission latency**: Minimal overhead for batch job creation and validation
- **Queue processing**: Variable based on system load and batch size
- **Completion notification**: Webhook or polling-based status updates
- **Result retrieval**: Standard API latency for downloading completed outputs

**Optimal Use Cases**: Batch processing excels for workloads where processing time flexibility enables significant cost and throughput benefits: large dataset analysis, content generation pipelines, model evaluation suites, and scheduled data enrichment tasks. ## Streaming Implementation ### Server-Sent Events Best Practices Implement streaming to improve perceived latency:

**Streaming Implementation**:

```python
import os
from groq import Groq

def stream_response(prompt):
    client = Groq(api_key=os.environ.get("GROQ_API_KEY"))
    stream = client.chat.completions.create(
        model="meta-llama/llama-4-scout-17b-16e-instruct",
        messages=[{"role": "user", "content": prompt}],
        stream=True
    )

    for chunk in stream:
        if chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content

# Example usage with concrete prompt
prompt = "Write a short story about a robot learning to paint in exactly 3 sentences."
for token in stream_response(prompt):
    print(token, end='', flush=True)
```

```javascript
import Groq from "groq-sdk";

async function streamResponse(prompt) {
  const groq = new Groq({
    apiKey: process.env.GROQ_API_KEY
  });

  const stream = await groq.chat.completions.create({
    model: "meta-llama/llama-4-scout-17b-16e-instruct",
    messages: [{ role: "user", content: prompt }],
    stream: true
  });

  for await (const chunk of stream) {
    if (chunk.choices[0]?.delta?.content) {
      process.stdout.write(chunk.choices[0].delta.content);
    }
  }
}

// Example usage with concrete prompt
const prompt = "Write a short story about a robot learning to paint in exactly 3 sentences.";
streamResponse(prompt);
```
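To quantify the perceived-latency gain from streaming, a client can time the first chunk separately from the full response. The sketch below is one possible way to do that; `measure_stream` is a hypothetical helper (not part of the Groq SDK) that works on any iterable of text chunks, such as the generator returned by `stream_response` above.

```python
import time


def measure_stream(chunks, clock=time.perf_counter):
    """Consume an iterable of text chunks and time it from the client side.

    Returns (text, time_to_first_chunk_s, total_s). The first value is None
    if the stream produced no chunks. `clock` is injectable for testing.
    """
    start = clock()
    first_chunk_at = None
    parts = []
    for chunk in chunks:
        if first_chunk_at is None:
            first_chunk_at = clock()  # first content arrived
        parts.append(chunk)
    end = clock()
    ttft = None if first_chunk_at is None else first_chunk_at - start
    return "".join(parts), ttft, end - start


# Usage with a stand-in stream; pass stream_response(prompt) for a real request.
text, ttft, total = measure_stream(iter(["Hello", ", ", "world"]))
print(text)
```

Because a Python generator only starts running when it is first iterated, passing `stream_response(prompt)` directly means the timer also covers sending the request, so the first value approximates client-observed TTFT including network time.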

**Key Benefits**:

- Users see immediate response initiation
- Better user engagement and experience
- Error handling during generation

_Best for_: Interactive applications requiring immediate feedback, user-facing chatbots, real-time content generation where perceived responsiveness is critical.

## Next Steps

Go over to our [Production-Ready Checklist](/docs/production-readiness/production-ready-checklist) and start the process of getting your AI applications scaled up to all your users with consistent performance.

Building something amazing? Need help optimizing? Our team is here to help you achieve production-ready performance at scale. Join our [developer community](https://community.groq.com)! --- ## Security Onboarding URL: https://console.groq.com/docs/production-readiness/security-onboarding # Security Onboarding Welcome to the **Groq Security Onboarding** guide. This page walks through best practices for protecting your API keys, securing client configurations, and hardening integrations before moving into production. ## Overview Security is a shared responsibility between Groq and our customers. While Groq ensures secure API transport and service isolation, customers are responsible for securing client-side configurations, keys, and data handling. All Groq API traffic is encrypted in transit using TLS 1.2+ and authenticated via API keys. ## Secure API Key Management Never expose or hardcode API keys directly into your source code. Use environment variables or a secret management system. **Warning:** Never embed keys in frontend code or expose them in browser bundles. If you need client-side usage, route through a trusted backend proxy. ## Key Rotation & Revocation * Rotate API keys periodically (e.g., quarterly). * Revoke keys immediately if compromise is suspected. * Use per-environment keys (dev / staging / prod). * Log all API key creations and deletions. ## Transport Security (TLS) Groq APIs enforce HTTPS (TLS 1.2 or higher). You should **never** disable SSL verification. ## Input and Prompt Safety When integrating Groq into user-facing systems, ensure that user inputs cannot trigger prompt injection or tool misuse. **Recommendations:** * Sanitize user input before embedding in prompts. * Avoid exposing internal system instructions or hidden context. * Validate model outputs (especially JSON / code / commands). * Limit model access to safe tools or actions only. 
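The recommendation above to validate model outputs can be sketched for the JSON case as follows. This is a minimal illustration using only the standard library; `validate_model_json` and the field names in the usage example are hypothetical, and a production system may prefer a full schema validator.

```python
import json


def validate_model_json(raw, required_fields):
    """Parse model output as JSON and check required fields and their types.

    `required_fields` maps field name -> expected Python type.
    Raises ValueError on any problem so callers never act on bad output.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    for name, expected_type in required_fields.items():
        if name not in data:
            raise ValueError(f"missing required field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name!r} should be {expected_type.__name__}")
    return data


# Usage: the schema below is an invented example.
parsed = validate_model_json(
    '{"label": "positive", "score": 0.9}',
    {"label": str, "score": float},
)
print(parsed["label"])
```

Rejecting the whole response on the first problem is a deliberately strict choice; it keeps unvalidated text from reaching tools, shells, or databases downstream.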
## Rate Limiting and Retry Logic Implement client-side rate limiting and exponential backoff for 429 / 5xx responses. ## Logging & Monitoring Maintain structured logs for all API interactions. **Include:** * Timestamp * Endpoint * Request latency * Key / service ID (non-secret) * Error codes **Tip:** Avoid logging sensitive data or raw model responses containing user information. ## Secure Tool Use & Agent Integrations When using Groq's **Tool Use** or external function execution features: * Expose only vetted, sandboxed tools. * Restrict external network calls. * Audit all registered tools and permissions. * Validate arguments and outputs. ## Incident Response If you suspect your API key is compromised: 1. Revoke the key immediately from the [Groq Console](https://console.groq.com/keys). 2. Rotate to a new key and redeploy secrets. 3. Review logs for suspicious activity. 4. Notify your security admin. **Warning:** Never reuse compromised keys, even temporarily. ## Resources - [Groq API Documentation](/docs/api-reference) - [Prompt Engineering Guide](/docs/prompting) - [Understanding and Optimizing Latency](/docs/production-readiness/optimizing-latency) - [Production-Ready Checklist](/docs/production-readiness/production-ready-checklist) - [Groq Developer Community](https://community.groq.com) - [OpenBench](https://openbench.dev)
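As a sketch of the exponential backoff recommended in the Rate Limiting and Retry Logic section above, the helper below retries on 429 and 5xx responses with capped, jittered delays. It is transport-agnostic: `send` is a hypothetical callable returning `(status_code, body)`, and the base delay, cap, and retry count are illustrative assumptions rather than Groq-recommended values.

```python
import random
import time


def backoff_delay(attempt, base=0.5, cap=30.0, jitter=random.random):
    """Delay before retry number `attempt` (0-based).

    Capped exponential growth scaled by a random factor in [0, 1)
    so that many clients do not retry in lockstep.
    """
    return min(cap, base * (2 ** attempt)) * jitter()


def call_with_retries(send, max_retries=5, sleep=time.sleep, jitter=random.random):
    """Call send() and retry on 429 / 5xx, up to `max_retries` retries.

    Returns the last (status_code, body) pair, whether or not it succeeded.
    `sleep` and `jitter` are injectable so the logic can be tested quickly.
    """
    for attempt in range(max_retries + 1):
        status, body = send()
        retryable = status == 429 or 500 <= status < 600
        if not retryable or attempt == max_retries:
            return status, body
        sleep(backoff_delay(attempt, jitter=jitter))
```

Non-retryable errors such as 400 or 401 are returned immediately, since repeating a malformed or unauthorized request will not help.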

*This security guide should be customized based on your specific application requirements and updated based on production learnings.* --- ## Production-Ready Checklist for Applications on GroqCloud URL: https://console.groq.com/docs/production-readiness/production-ready-checklist # Production-Ready Checklist for Applications on GroqCloud Deploying LLM applications to production involves critical decisions that directly impact user experience, operational costs, and system reliability. **This comprehensive checklist** guides you through the essential steps to launch and scale your Groq-powered application with confidence. From selecting the optimal model architecture and configuring processing tiers to implementing robust monitoring and cost controls, each section addresses the common pitfalls that can derail even the most promising LLM applications. ## Pre-Launch Requirements ### Model Selection Strategy * Document latency requirements for each use case * Test quality/latency trade-offs across model sizes * Reference the Model Selection Workflow in the Latency Optimization Guide ### Prompt Engineering Optimization * Optimize prompts for token efficiency using context management strategies * Implement prompt templates with variable injection * Test structured output formats for consistency * Document optimization results and token savings ### Processing Tier Configuration * Reference the Processing Tier Selection Workflow in the Latency Optimization Guide * Implement retry logic for Flex Processing failures * Design callback handlers for Batch Processing ## Performance Optimization ### Streaming Implementation * Test streaming vs non-streaming latency impact and user experience * Configure appropriate timeout settings * Handle streaming errors gracefully ### Network and Infrastructure * Measure baseline network latency to Groq endpoints * Configure timeouts based on expected response lengths * Set up retry logic with exponential backoff * Monitor API response 
headers for routing information

### Load Testing

* Test with realistic traffic patterns
* Validate linear scaling characteristics
* Test different processing tier behaviors
* Measure TTFT and generation speed under load

## Monitoring and Observability

### Key Metrics to Track

* **TTFT percentiles** (P50, P90, P95, P99)
* **End-to-end latency** (client to completion)
* **Token usage and costs** per endpoint
* **Error rates** by processing tier
* **Retry rates** for Flex Processing (less than 5% target)

### Alerting Setup

* Set up alerts for latency degradation (>20% increase)
* Monitor error rates (alert if >0.5%)
* Track cost increases (alert if >20% above baseline)
* Use Groq Console for usage monitoring

## Cost Optimization

### Usage Monitoring

* Track token efficiency metrics
* Monitor cost per request across different models
* Set up cost alerting thresholds
* Analyze high-cost endpoints weekly

### Optimization Strategies

* Leverage smaller models where quality permits
* Use Batch Processing for non-urgent workloads (50% cost savings)
* Implement intelligent processing tier selection
* Optimize prompts to reduce input/output tokens

## Launch Readiness

### Final Validation

* Complete end-to-end testing with production-like loads
* Test all failure scenarios and error handling
* Validate cost projections against actual usage
* Verify monitoring and alerting systems
* Test graceful degradation strategies

### Go-Live Preparation

* Define gradual rollout plan
* Document rollback procedures
* Establish performance baselines
* Define success metrics and SLAs

## Post-Launch Optimization

### First Week

* Monitor all metrics closely
* Address any performance issues immediately
* Fine-tune timeout and retry settings
* Gather user feedback on response quality and speed

### First Month

* Review actual vs projected costs
* Optimize high-frequency prompts based on usage patterns
* Evaluate processing tier effectiveness
* A/B test prompt optimizations
* Document optimization
wins and lessons learned ## Key Performance Targets | Metric | Target | Alert Threshold | |--------|--------|-----------------| | TTFT P95 | Model-dependent* | >20% increase | | Error Rate | <0.1% | >0.5% | | Flex Retry Rate | <5% | >10% | | Cost per 1K tokens | Baseline | +20% | *Reference [Artificial Analysis](https://artificialanalysis.ai/providers/groq) for current model benchmarks ## Resources - [Groq API Documentation](/docs/api-reference) - [Prompt Engineering Guide](/docs/prompting) - [Understanding and Optimizing Latency on Groq](/docs/production-readiness/optimizing-latency) - [Groq Developer Community](https://community.groq.com) - [OpenBench](https://openbench.dev)
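
The alert thresholds in the Key Performance Targets table can be checked mechanically. The following is a minimal sketch and not part of the Groq documentation: the function names `percentile` and `check_alerts`, their parameters, and the alert labels are hypothetical, and the percentile uses a simple nearest-rank method rather than whatever your metrics backend implements.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile (p in 0..100) of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must be non-empty")
    ordered = sorted(samples)
    # Nearest-rank: the smallest value with at least p% of samples at or below it.
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def check_alerts(ttft_samples, baseline_ttft_p95, error_rate, flex_retry_rate,
                 cost_per_1k, baseline_cost_per_1k):
    """Return the names of the alerts that fire, using the table's thresholds.

    Rates are fractions (0.005 means 0.5%). All names here are illustrative.
    """
    alerts = []
    if percentile(ttft_samples, 95) > baseline_ttft_p95 * 1.20:  # >20% increase
        alerts.append("ttft_p95")
    if error_rate > 0.005:  # >0.5%
        alerts.append("error_rate")
    if flex_retry_rate > 0.10:  # >10%
        alerts.append("flex_retry_rate")
    if cost_per_1k > baseline_cost_per_1k * 1.20:  # +20% over baseline
        alerts.append("cost_per_1k_tokens")
    return alerts
```

Passing rates as fractions keeps the comparisons unit-free; the baselines are whatever you recorded under "Establish performance baselines" before launch.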

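The "retry logic with exponential backoff" item under Network and Infrastructure can be sketched as follows. This is an illustrative helper using only the standard library, not a Groq SDK feature: `retry_with_backoff` and its parameters are hypothetical names, and in a real application `retryable` would be set to your client library's transient error types. The `sleep` parameter exists so the helper can be exercised without actually waiting.

```python
import random
import time


def retry_with_backoff(call, max_attempts=5, base_delay=0.5, max_delay=8.0,
                       retryable=(TimeoutError, ConnectionError),
                       sleep=time.sleep):
    """Run call() until it succeeds, backing off exponentially between tries."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retryable:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # The delay cap doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: pick a random delay below the cap so that many
            # clients failing together do not all retry at the same instant.
            sleep(random.uniform(0, cap))
```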
---

*This checklist should be customized based on your specific application requirements and updated based on production learnings.*

---

## Quickstart: Performing Chat Completion (json)

URL: https://console.groq.com/docs/quickstart/scripts/performing-chat-completion.json

```json
{
  "messages": [
    {
      "role": "user",
      "content": "Explain the importance of fast language models"
    }
  ],
  "model": "llama-3.3-70b-versatile"
}
```

---

## Quickstart: Quickstart Ai Sdk (js)

URL: https://console.groq.com/docs/quickstart/scripts/quickstart-ai-sdk

```javascript
import Groq from "groq-sdk";

const groq = new Groq({ apiKey: process.env.GROQ_API_KEY });

export async function main() {
  const chatCompletion = await getGroqChatCompletion();
  // Print the completion returned by the LLM.
  console.log(chatCompletion.choices[0]?.message?.content || "");
}

export async function getGroqChatCompletion() {
  return groq.chat.completions.create({
    messages: [
      {
        role: "user",
        content: "Explain the importance of fast language models",
      },
    ],
    model: "openai/gpt-oss-20b",
  });
}
```

---

## Quickstart: Performing Chat Completion (py)

URL: https://console.groq.com/docs/quickstart/scripts/performing-chat-completion.py

```python
import os

from groq import Groq

client = Groq(
    api_key=os.environ.get("GROQ_API_KEY"),
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Explain the importance of fast language models",
        }
    ],
    model="llama-3.3-70b-versatile",
)

print(chat_completion.choices[0].message.content)
```

---

## Quickstart: Performing Chat Completion (js)

URL: https://console.groq.com/docs/quickstart/scripts/performing-chat-completion

```javascript
import Groq from "groq-sdk";

const groq = new Groq({ apiKey: process.env.GROQ_API_KEY });

export async function main() {
  const chatCompletion = await getGroqChatCompletion();
  // Print the completion returned by the LLM.
  console.log(chatCompletion.choices[0]?.message?.content || "");
}

export async function getGroqChatCompletion() {
  return groq.chat.completions.create({
    messages: [
      {
        role: "user",
        content: "Explain the importance of fast language models",
      },
    ],
    model: "openai/gpt-oss-20b",
  });
}
```

---

## Quickstart

URL: https://console.groq.com/docs/quickstart

# Quickstart

Get up and running with the Groq API in a few minutes, with the steps below.

For additional support, catch our [onboarding video](/docs/overview).

## Create an API Key

Please visit [here](/keys) to create an API Key.

## Set up your API Key (recommended)

Configure your API key as an environment variable. This approach streamlines your API usage by eliminating the need to include your API key in each request. Moreover, it enhances security by minimizing the risk of inadvertently including your API key in your codebase.

### In your terminal of choice:

```shell
export GROQ_API_KEY=
```

First, install the `ai` package and the Groq provider `@ai-sdk/groq`:

```shell
pnpm add ai @ai-sdk/groq
```

Then, you can use the Groq provider to generate text. By default, the provider will look for `GROQ_API_KEY` as the API key.

```js
// (example JavaScript code)
```

### Using LiteLLM:

[LiteLLM](https://www.litellm.ai/) is both a Python-based open-source library and a proxy/gateway server that simplifies building large language model (LLM) applications. Documentation for LiteLLM [can be found here](https://docs.litellm.ai/).

First, install the `litellm` package:

```shell
pip install litellm
```

Then, set up your API key:

```shell
export GROQ_API_KEY="your-groq-api-key"
```

Now you can easily use any model from Groq. Just set `model=groq/

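As a minimal sketch of the LiteLLM call described above (not the original example from this page): LiteLLM routes on a `groq/` provider prefix in the model string and reads `GROQ_API_KEY` from the environment. The helper names `groq_model_id` and `ask_groq` are hypothetical, and the model name is only an illustrative choice.

```python
import os


def groq_model_id(model_name: str) -> str:
    """Add the `groq/` provider prefix that LiteLLM uses to route the request."""
    return model_name if model_name.startswith("groq/") else f"groq/{model_name}"


def ask_groq(prompt: str, model_name: str = "llama-3.3-70b-versatile") -> str:
    """Send one user message through LiteLLM and return the reply text."""
    # Imported here so this module still loads where litellm is not installed.
    from litellm import completion

    if not os.environ.get("GROQ_API_KEY"):
        raise RuntimeError("Set GROQ_API_KEY before calling ask_groq()")

    response = completion(
        model=groq_model_id(model_name),
        messages=[{"role": "user", "content": prompt}],
    )
    # LiteLLM returns an OpenAI-style response object.
    return response.choices[0].message.content
```

With the key exported, `print(ask_groq("Explain the importance of fast language models"))` would issue a single request.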
```python
# (example Python code)
```

### Using LangChain:

[LangChain](https://www.langchain.com/) is a framework for developing reliable agents and applications powered by large language models (LLMs). Documentation for LangChain [can be found here for Python](https://python.langchain.com/docs/introduction/), and [here for JavaScript](https://js.langchain.com/docs/introduction/).

When using Python, first install the `langchain-groq` package:

```shell
pip install langchain-groq
```

Then, set up your API key:

```shell
export GROQ_API_KEY="your-groq-api-key"
```

Now you can build chains and agents that can perform multi-step tasks. This chain combines a prompt that tells the model what information to extract, a parser that ensures the output follows a specific JSON format, and llama-3.3-70b-versatile to do the actual text processing.

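One way such a chain might look is sketched below. This is an assumption-laden illustration rather than the original example: the extraction task, `SYSTEM_PROMPT`, and `build_extraction_chain` are hypothetical, while `ChatGroq`, `ChatPromptTemplate`, and `JsonOutputParser` are the LangChain classes for the model, prompt, and JSON parsing steps.

```python
# Hypothetical instructions; kept free of curly braces because the prompt
# template treats braces as variable placeholders.
SYSTEM_PROMPT = (
    "Extract the product name and price from the user's text. "
    'Respond only with a JSON object that has the keys "product" and "price".'
)


def build_extraction_chain(model_name: str = "llama-3.3-70b-versatile"):
    """Compose prompt -> Groq chat model -> JSON parser into one runnable."""
    # Imported here so this module still loads where LangChain is not installed.
    from langchain_core.output_parsers import JsonOutputParser
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_groq import ChatGroq

    prompt = ChatPromptTemplate.from_messages(
        [("system", SYSTEM_PROMPT), ("user", "{text}")]
    )
    # ChatGroq reads GROQ_API_KEY from the environment.
    llm = ChatGroq(model=model_name, temperature=0)
    # The | operator pipes each step's output into the next step.
    return prompt | llm | JsonOutputParser()
```

Calling `build_extraction_chain().invoke({"text": "The new keyboard costs $49."})` would return the parsed JSON as a Python dictionary, assuming the model follows the instructions.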
```python
# (example Python code)
```

Now that you have successfully received a chat completion, you can try out the other endpoints in the API.

### Next Steps

- Check out the [Playground](/playground) to try out the Groq API in your browser
- Join our GroqCloud [developer community](https://community.groq.com/)
- Add a how-to on your project to the [Groq API Cookbook](https://github.com/groq/groq-api-cookbook)

---

## Structured Outputs: Email Classification (py)

URL: https://console.groq.com/docs/structured-outputs/scripts/email-classification.py

```python
from groq import Groq
from pydantic import BaseModel
import json

client = Groq()


class KeyEntity(BaseModel):
    entity: str
    type: str


class EmailClassification(BaseModel):
    category: str
    priority: str
    confidence_score: float
    sentiment: str
    key_entities: list[KeyEntity]
    suggested_actions: list[str]
    requires_immediate_attention: bool
    estimated_response_time: str


response = client.chat.completions.create(
    model="moonshotai/kimi-k2-instruct-0905",
    messages=[
        {
            "role": "system",
            "content": "You are an email classification expert. Classify emails into structured categories with confidence scores, priority levels, and suggested actions.",
        },
        {"role": "user", "content": "Subject: URGENT: Server downtime affecting production\n\nHi Team,\n\nOur main production server went down at 2:30 PM EST. Customer-facing services are currently unavailable. We need immediate action to restore services. Please join the emergency call.\n\nBest regards,\nDevOps Team"},
    ],
    response_format={
        "type": "json_schema",
        "json_schema": {
            "name": "email_classification",
            "schema": EmailClassification.model_json_schema()
        }
    }
)

# Parse the JSON reply and validate it against the Pydantic model.
email_classification = EmailClassification.model_validate(json.loads(response.choices[0].message.content))
print(json.dumps(email_classification.model_dump(), indent=2))
```

---

## Structured Outputs: Sql Query Generation (js)

URL: https://console.groq.com/docs/structured-outputs/scripts/sql-query-generation

```javascript
import Groq from "groq-sdk";

const groq = new Groq();

const response = await groq.chat.completions.create({
  model: "moonshotai/kimi-k2-instruct-0905",
  messages: [
    {
      role: "system",
      content: "You are a SQL expert. Generate structured SQL queries from natural language descriptions with proper syntax validation and metadata.",
    },
    { role: "user", content: "Find all customers who made orders over $500 in the last 30 days, show their name, email, and total order amount" },
  ],
  response_format: {
    type: "json_schema",
    json_schema: {
      name: "sql_query_generation",
      schema: {
        type: "object",
        properties: {
          query: { type: "string" },
          query_type: {
            type: "string",
            enum: ["SELECT", "INSERT", "UPDATE", "DELETE", "CREATE", "ALTER", "DROP"]
          },
          tables_used: {
            type: "array",
            items: { type: "string" }
          },
          estimated_complexity: {
            type: "string",
            enum: ["low", "medium", "high"]
          },
          execution_notes: {
            type: "array",
            items: { type: "string" }
          },
          validation_status: {
            type: "object",
            properties: {
              is_valid: { type: "boolean" },
              syntax_errors: {
                type: "array",
                items: { type: "string" }
              }
            },
            required: ["is_valid", "syntax_errors"],
            additionalProperties: false
          }
        },
        required: ["query", "query_type", "tables_used", "estimated_complexity", "execution_notes", "validation_status"],
        additionalProperties: false
      }
    }
  }
});

const result = JSON.parse(response.choices[0].message.content || "{}");
console.log(result);
```

---

## Structured Outputs: File System Schema (json)

URL: https://console.groq.com/docs/structured-outputs/scripts/file-system-schema.json

```json
{
  "type": "object",
  "properties": {
    "file_system": {
      "$ref": "#/$defs/file_node"
    }
  },
  "$defs": {
    "file_node": {
      "type": "object",
      "properties": {
        "name": {
          "type": "string",
          "description": "File or directory name"
        },
        "type": {
          "type": "string",
          "enum": ["file", "directory"]
        },
        "size": {
          "type": "number",
          "description": "Size in bytes (0 for directories)"
        },
        "children": {
          "anyOf": [
            {
              "type": "array",
              "items": {
                "$ref": "#/$defs/file_node"
              }
            },
            {
              "type": "null"
            }
          ]
        }
      },
      "additionalProperties": false,
      "required": ["name", "type", "size", "children"]
    }
  },
  "additionalProperties": false,
  "required": ["file_system"]
}
```

---

## Structured Outputs: Appointment Booking Schema (json)

URL: https://console.groq.com/docs/structured-outputs/scripts/appointment-booking-schema.json

```json
{
  "name": "book_appointment",
  "description": "Books a medical appointment",
  "strict": true,
  "schema": {
    "type": "object",
    "properties": {
      "patient_name": {
        "type": "string",
        "description": "Full name of the patient"
      },
      "appointment_type": {
        "type": "string",
        "description": "Type of medical appointment",
        "enum": ["consultation", "checkup", "surgery", "emergency"]
      }
    },
    "additionalProperties": false,
    "required": ["patient_name", "appointment_type"]
  }
}
```

---

## Structured Outputs: Task Creation Schema (json)

URL: https://console.groq.com/docs/structured-outputs/scripts/task-creation-schema.json

```json
{
  "name": "create_task",
  "description": "Creates a new task in the project management system",
  "strict": true,
  "parameters": {
    "type": "object",
    "properties": {
      "title": {
        "type": "string",
        "description": "The task title or summary"
      },
      "priority": {
        "type": "string",
        "description": "Task priority level",
        "enum": ["low", "medium", "high", "urgent"]
      }
    },
    "additionalProperties": false,
    "required": ["title", "priority"]
  }
}
```

---

## Structured Outputs: Support Ticket Zod.doc (ts)

URL: https://console.groq.com/docs/structured-outputs/scripts/support-ticket-zod.doc

```javascript
import Groq from "groq-sdk";
import { z } from "zod";

const groq = new Groq();

const supportTicketSchema = z.object({
  category: z.enum(["api", "billing", "account", "bug", "feature_request", "integration", "security", "performance"]),
  priority: z.enum(["low", "medium", "high", "critical"]),
  urgency_score: z.number(),
  customer_info: z.object({
    name: z.string(),
    company: z.string().optional(),
    tier: z.enum(["free", "paid", "enterprise", "trial"])
  }),
  technical_details: z.array(z.object({
    component: z.string(),
    error_code: z.string().optional(),
    description: z.string()
  })),
  keywords: z.array(z.string()),
  requires_escalation: z.boolean(),
  estimated_resolution_hours: z.number(),
  follow_up_date: z.string().datetime().optional(),
  summary: z.string()
});

type SupportTicket = z.infer